Computers—

THE MACHINES WE THINK WITH

D. S. HALACY, Jr.

HARPER & ROW, PUBLISHERS

NEW YORK, EVANSTON, AND LONDON

COMPUTERS—THE MACHINES WE THINK WITH. Copyright © 1962, by Daniel S. Halacy, Jr. Printed in the United States of America. All rights in this book are reserved. No part of the book may be used or reproduced in any manner whatsoever without written permission except in the case of brief quotations embodied in critical articles and reviews. For information address Harper & Row, Publishers, Incorporated, 49 East 33rd Street, New York 16, N.Y.

| 1. | Computers—The Machines We Think With |

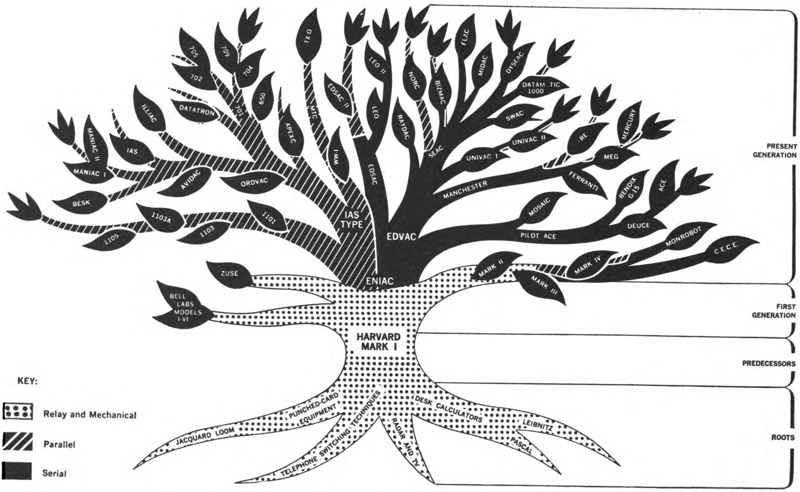

| 2. | The Computer’s Past |
| 3. | How Computers Work |
| 4. | Computer Cousins—Analog and Digital |
| 5. | The Binary Boolean Bit |
| 6. | The Electronic Brain |
| 7. | Uncle Sam’s Computers |
| 8. | The Computer in Business and Industry |
| 9. | The Computer and Automation |
| 10. | The Academic Computer |
| 11. | The Road Ahead |

While you are reading this sentence, an electronic computer is performing 3 million mathematical operations! Before you read this page, another computer could translate it and several others into a foreign language. Electronic “brains” are taking over chores that include the calculation of everything from automobile parking fees to zero hour for space missile launchings.

Despite bitter winter weather, a recent conference on computers drew some 4,000 delegates to Washington, D.C., indicating the importance and scope of the new industry. The 1962 domestic market for computers and associated equipment is estimated at just under $3 billion, with more than 150,000 people employed in manufacture, operation, and maintenance of the machines.

In the short time since the first electronic computer made its appearance, these thinking machines have made such fantastic strides in so many different directions that most of us are unaware how much our lives are already being affected by them. Banking, for example, employs complex machines that process checks and handle accounts so much faster than human bookkeepers that they do more than an hour’s work in less than thirty seconds.

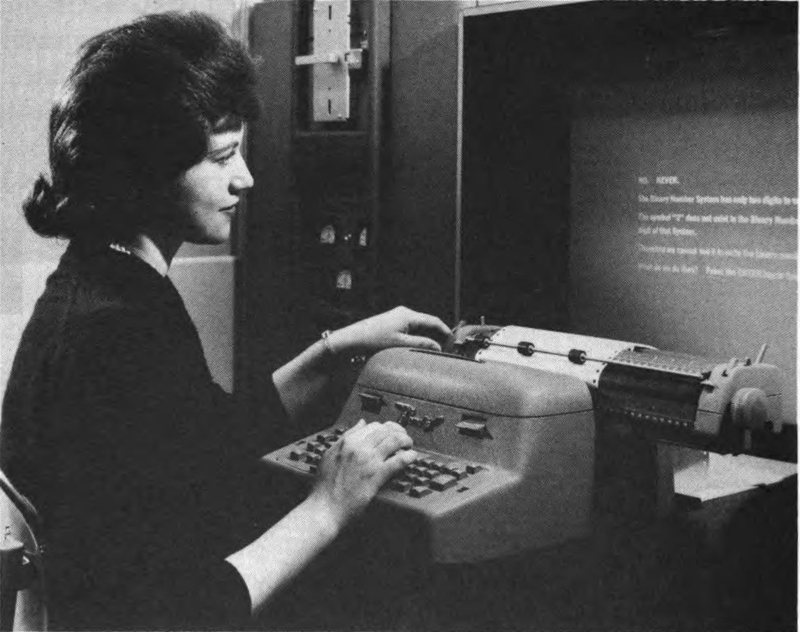

General Electric Co., Computer Dept.

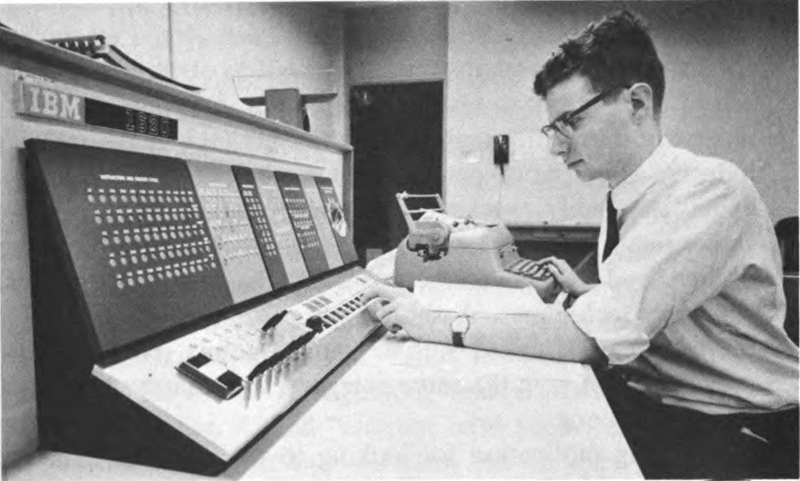

Programmer at console of computer used in electronic processing of bank checking accounts.

Our government is one of the largest users of computers and “data-processing machines.” The census depends on such equipment, and census work played a part in the development of early mechanical types of computers when Hollerith invented a punched-card system many years ago. In another application, the post office uses letter readers that scan addresses and sort mail at speeds faster than the human eye can keep up with. Many magazines have put these electronic readers to work whizzing through mailing lists.

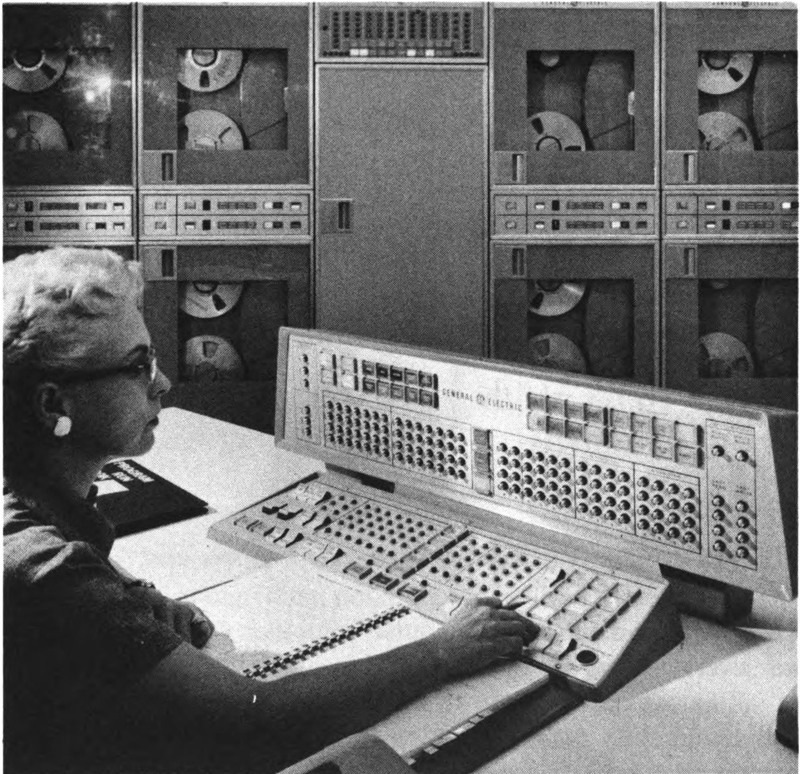

General Electric Co., Computer Dept.

Numbers across bottom of check are printed in magnetic ink and can be read by the computer.

In Sweden, writer Astrid Lindgren received an additional 9,000 kronor in royalties for one year because of library loans. Since this was based on 850,000 total loans of her books from thousands of schools and libraries, the bookkeeping was possible only with an electronic computer.

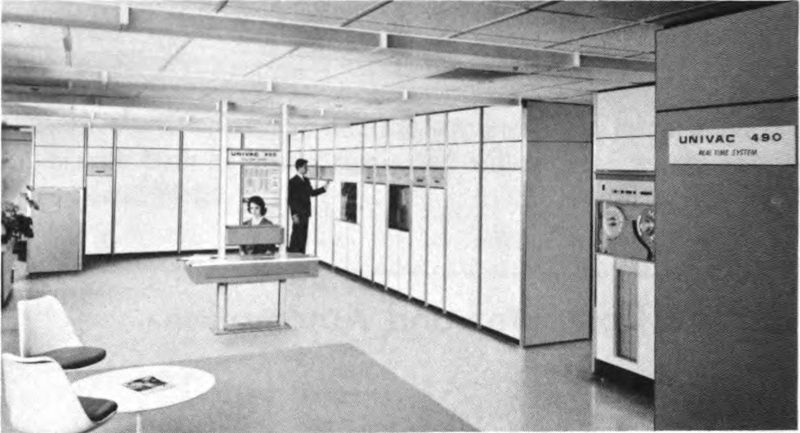

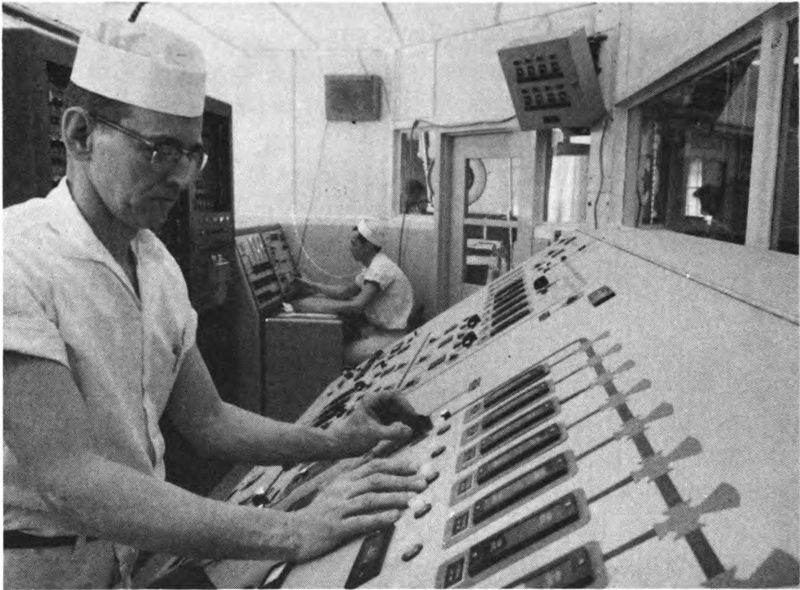

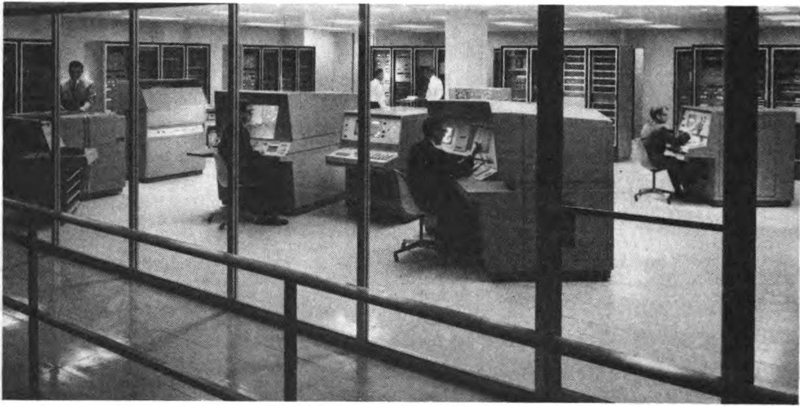

Computers are beginning to take over control of factories, steel mills, bakeries, chemical plants, and even the manufacture of ice cream. In scientific research, computers are solving mathematical and logical problems so complex that they would go forever unsolved if men had to do the work. One of the largest computing systems yet designed, incorporating half a million transistors and millions of other parts, handles ticket reservations for the airlines. Others do flight planning and air traffic control itself.

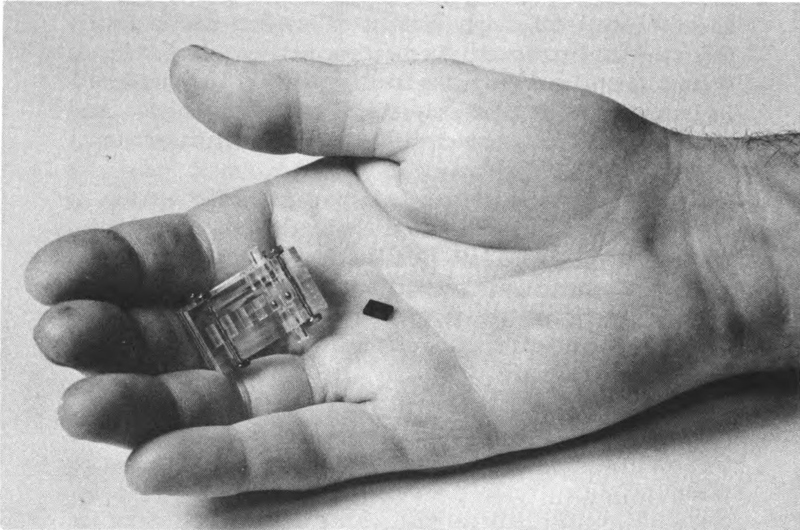

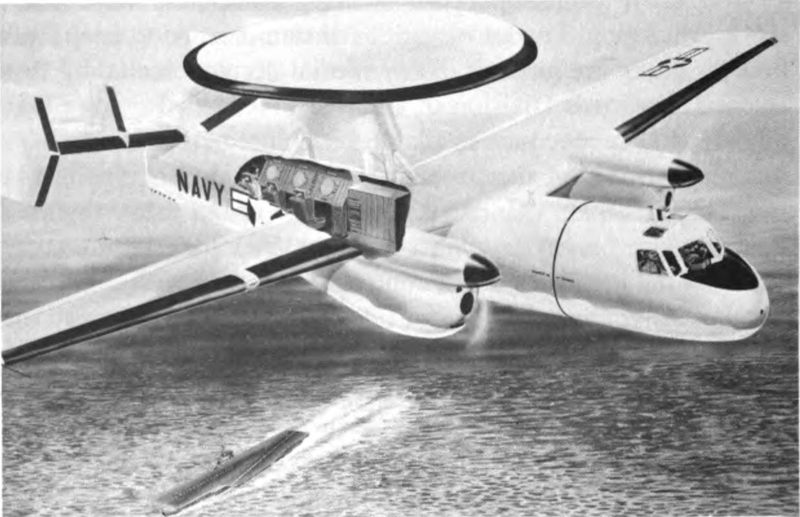

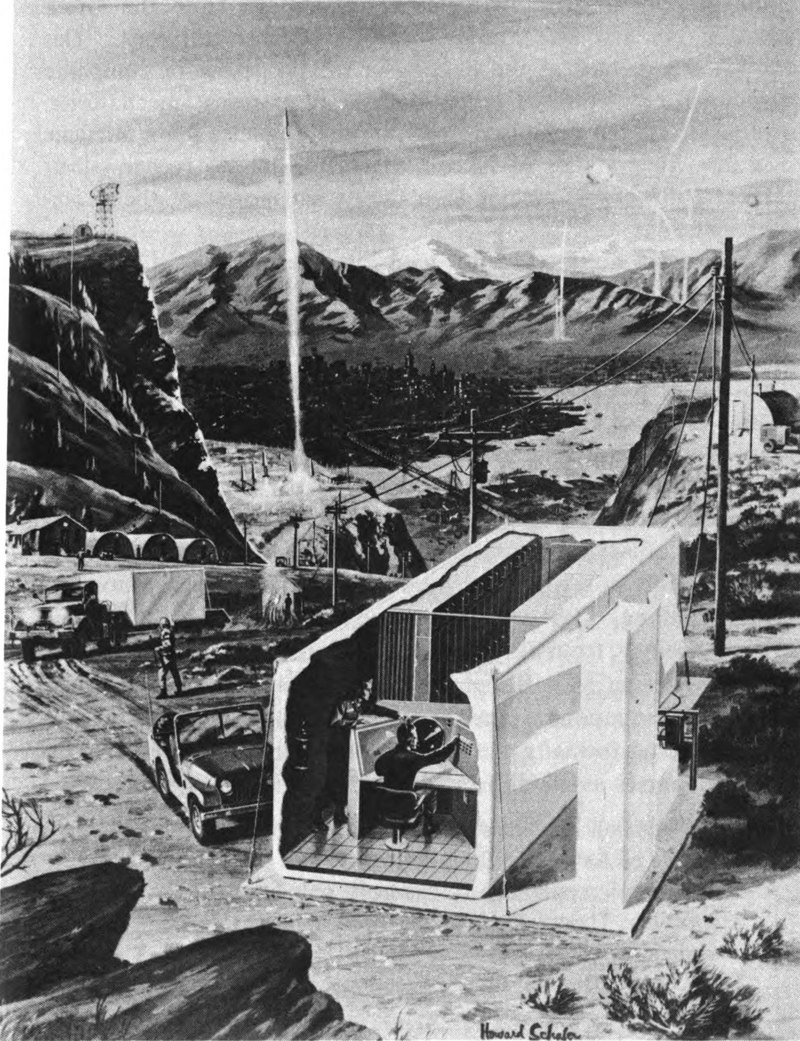

Gigantic computerized air defense systems like SAGE and NORAD help guard us from enemy attack. When John Glenn made his space flight, giant computers on the ground made the vital calculations to bring him safely back. Tiny computers in space vehicles themselves have proved they can survive the shocks of launching and the environment of space. These airborne computers make possible the operation of Polaris, Atlas, and Minuteman missiles. Such applications are indicative of the scope of computer technology today: the ground-based machines are huge, taking up rooms and even entire buildings, while those tailored for missiles may fit in the palm of the hand. One current military project is such an airborne computer, the size of a pack of cigarettes yet able to perform thousands of mathematical and logical operations a second.

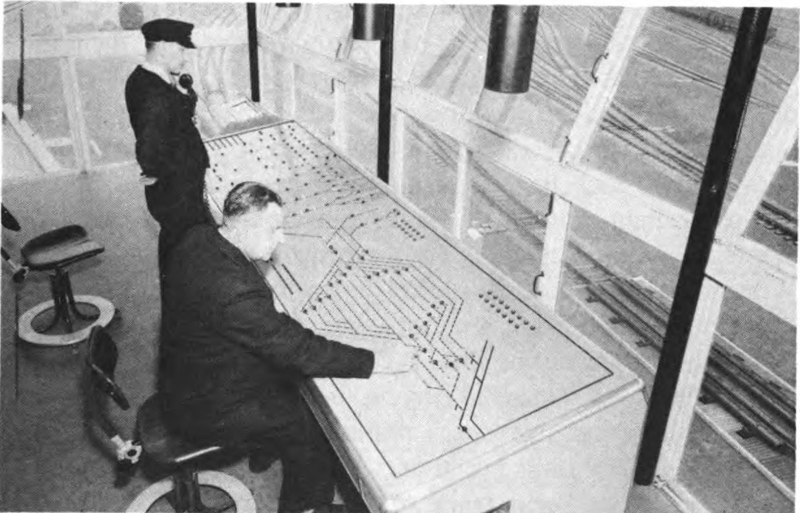

Computers are a vital part of automation, and already they are running production lines and railroads, making mechanical drawings and weather predictions, and figuring statistics for insurance companies as well as odds for gamblers. Electronic machines permit the blind to read a page of ordinary type, and also control material patterns in knitting mills. This last use is of particular interest since it represents almost a full circle in computer science. Oddly, it was the loom that inspired the first punched cards invented and used to good advantage by the French designer Jacquard. These homely forerunners of stored information sparked the science that now returns to control the mills.

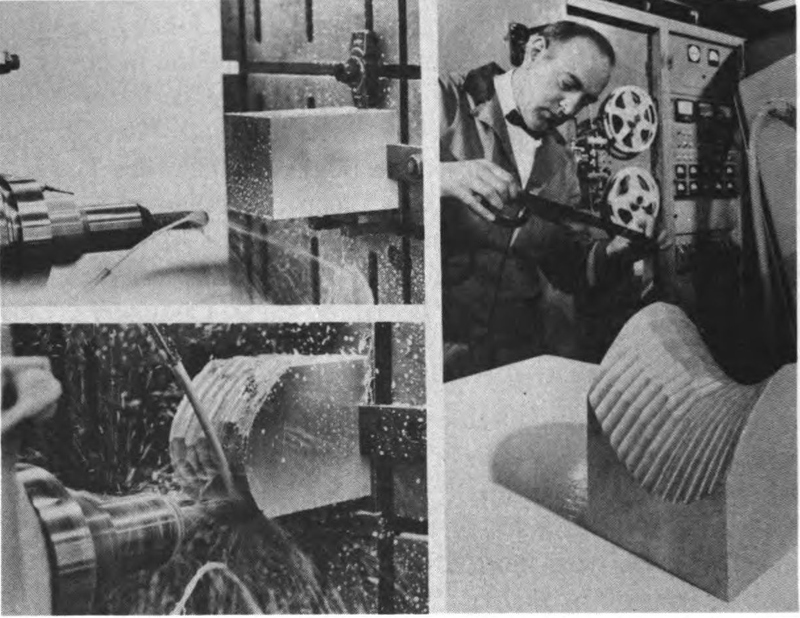

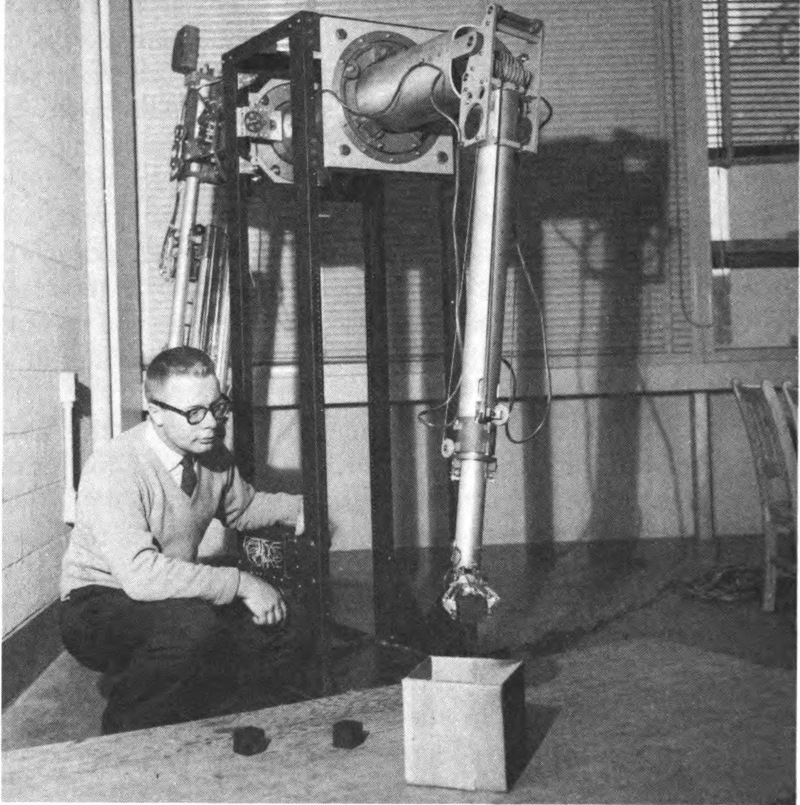

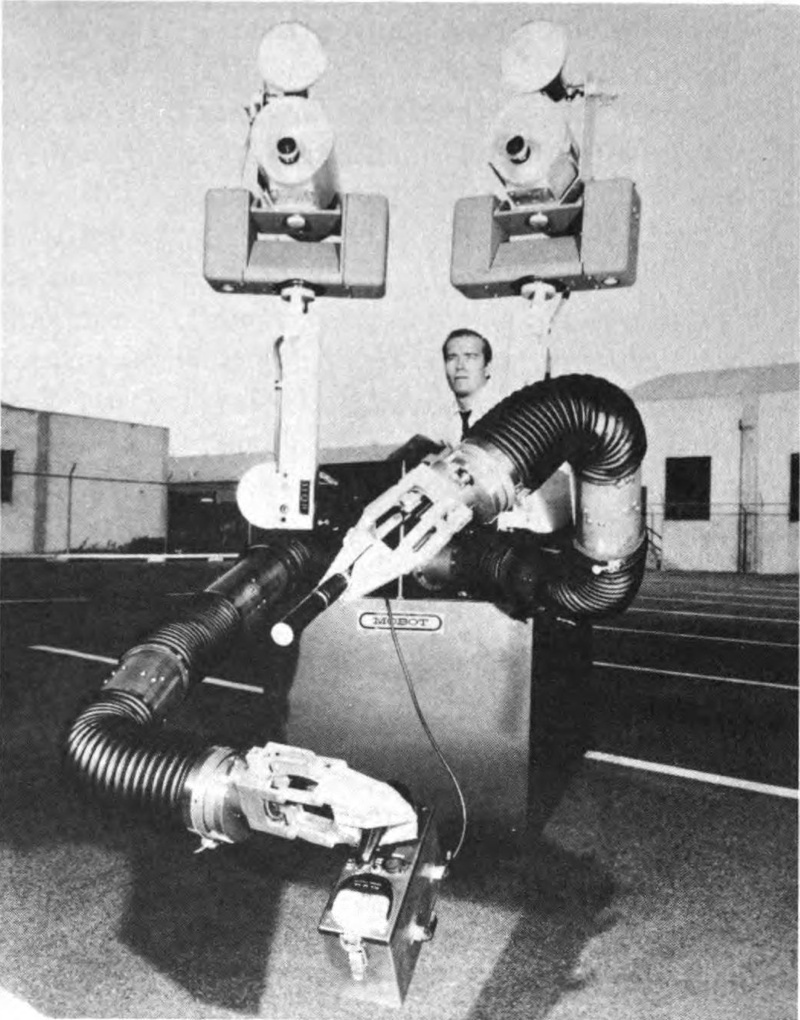

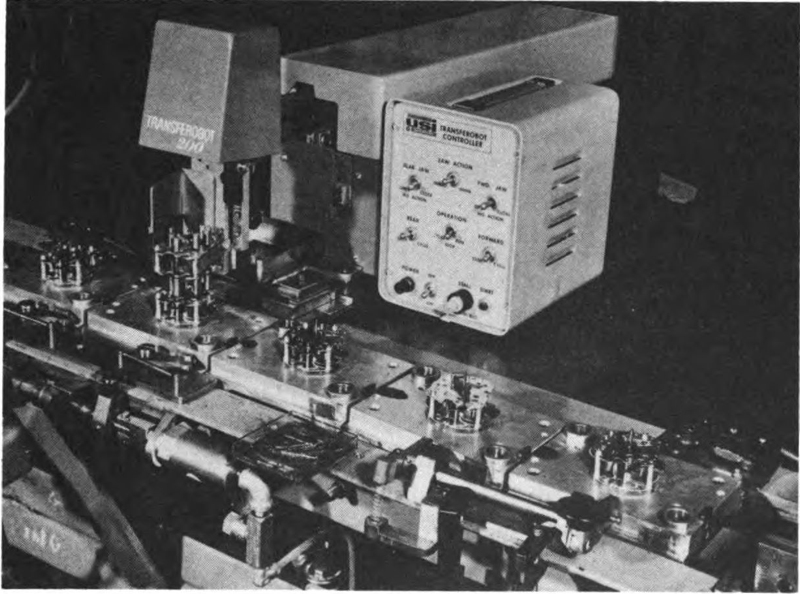

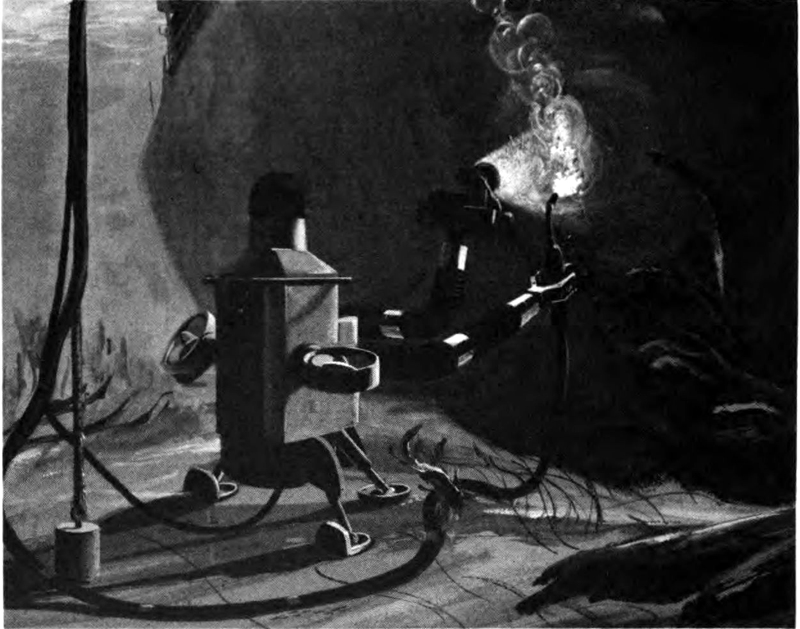

Men very wisely are now letting computers design other computers, and in one recent project a Bell Laboratories computer did a job in twenty-five minutes that would have taken a human designer a month. Even more challenging are the modern-day “robots” performing precision operations in industrial plants. One such, called “Unimate,” is simply guided through the mechanical operations one time, and can then handle the job alone. “TransfeRobot 200” is already doing assembly-line work in dozens of plants.

The hope has been expressed that computer extension of our brainpower by a thousandfold would give our country a lead over potential enemies. This is a rather vain hope, since the United States has no corner on the computer market. There is worldwide interest in computers, and machines are being built in Russia, England, France, Germany, Switzerland, Holland, Sweden, Africa, Japan, and other countries. A remarkable computer in Japan recognizes 8,000 colors and analyzes them instantly. Computer translation from one language to another has been mentioned, and work is even being done on machines that will permit us to speak English into a phone in this country and have it come out French, or whatever we will, overseas! Of course, computers have a terminology all their own too: words like analog and digital, memory cores, clock rates, and so on.

The broad application of computers has been called the “second industrial revolution.” What the steam engine did for muscles, the modern computer is beginning to do for our brains. In their slow climb from caveman days, humans have encountered ever more problems; one of the biggest of these problems eventually came to be merely how to solve all the other problems.

At first man counted on his fingers, and then his toes. As the problems grew in size, he used pebbles and sticks, and finally beads. These became the abacus, a clever calculating device still in constant use in many parts of the world. Only now, with the advent of low-cost computers, are the Japanese turning from the soroban, their version of the abacus.

The large-scale computers we are becoming familiar with are not really as new as they seem. An Englishman named Babbage built what he called a “difference engine” way back in 1831. This complex mechanical computer cost a huge sum even by today’s standards, and although it was never completed to Babbage’s satisfaction, it was the forerunner and model for the successful large computers that began to appear a hundred years later. In the meantime, of course, electronics has come to the aid of the designer. Today, computer switches operate at billionths-of-a-second speeds and thus make possible the rapid handling of quantities of work like the 14 billion checks we Americans wrote in 1961.

There are dozens of companies now in the computer manufacturing field, producing a variety of machines ranging in price from less than a hundred dollars to rental fees of $100,000 a month or more. Even at these higher prices the big problem of some manufacturers is to keep up with demand. A $1 billion market in 1960, the computer field is predicted to climb to $5 billion by 1965, and after that it is anyone’s guess. Thus far all expert predictions have proved extremely conservative.

The path of computer progress is not always smooth. Recently a computer which had been installed on a toll road to calculate charges was so badly treated by motorists it had to be removed. Another unfortunate occurrence happened on Wall Street. A clever man juggled the controls of a large computer used in stock-market work and “made” himself a quarter of a million dollars, though he ultimately landed in jail for his illegal computer button pushing. Interestingly, there is one corrective institution which already offers a course in computer engineering for its inmates.

So great is the impact of computers that lawyers recently met for a three-day conference on the legal aspects of the new machines. Points taken up included: Can business records on magnetic tape or other storage media be used as evidence? Can companies be charged with mismanagement for not using computers in their business? How can confidential material be handled satisfactorily on computers?

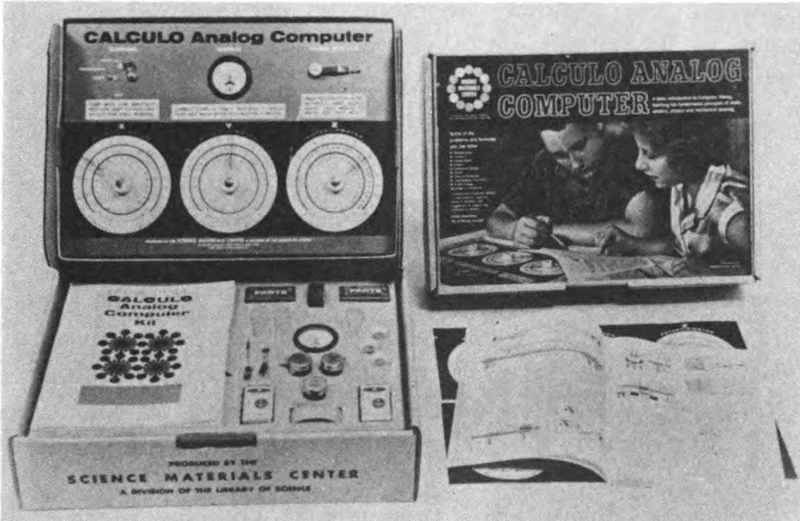

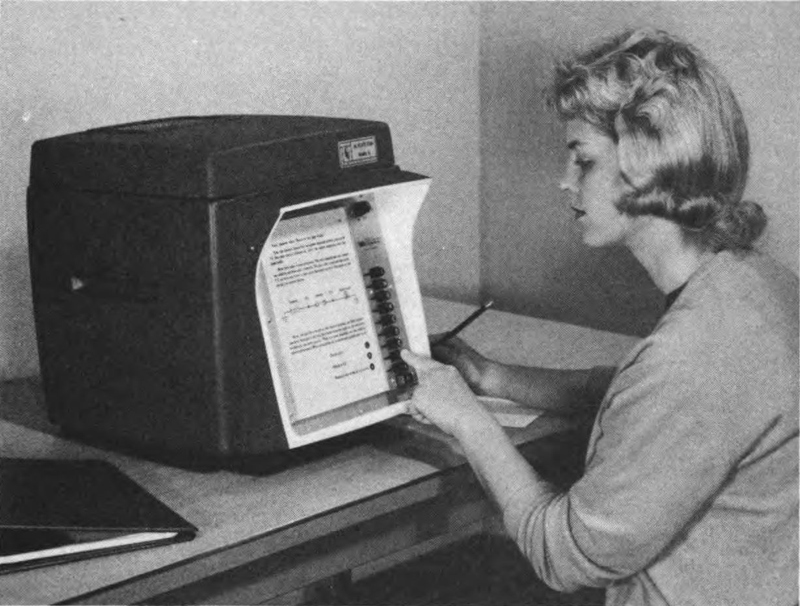

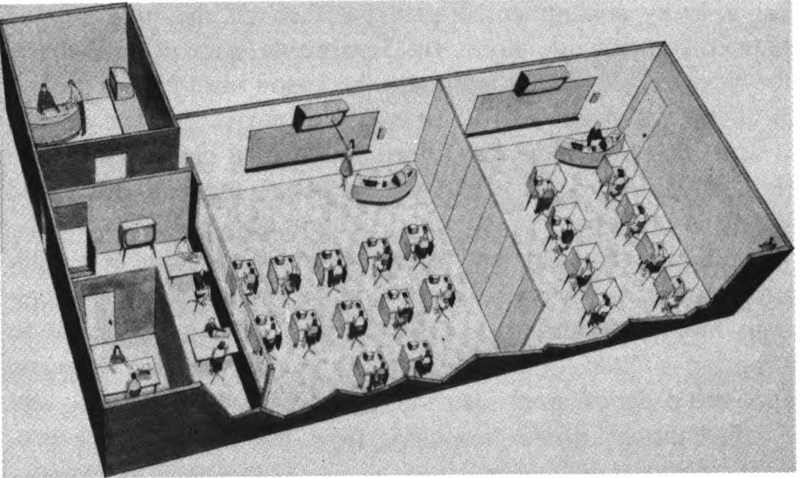

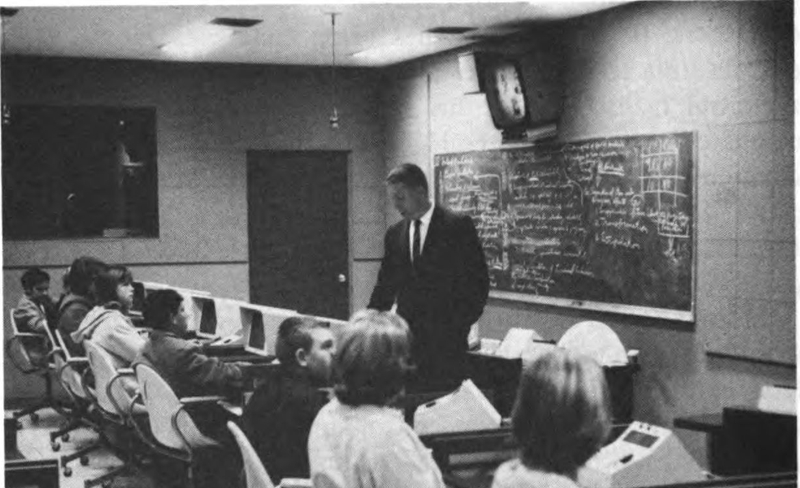

Along with computing machines a whole new technology is growing. Universities and colleges—even high schools—are teaching courses in computers. And the computer itself is getting into the teaching business too. The “teaching machine” is one of the most challenging computer developments to come along so far. These mechanical professors range from simple “programmed” notebooks, such as the Book of Knowledge and Encyclopedia Britannica are experimenting with, to complex computerized systems such as that developed by U.S. Industries, Inc., for the Air Force and others.

The computer as a teaching machine immediately raises the question of intelligence, and whether or not the computer has any. Debate waxes hot on this subject; but perhaps one authority was only half joking when he said that the computer designer’s competition was a unit about the size of a grapefruit, using only a tenth of a volt of electricity, with a memory 10,000 times as extensive as any existing electronic computer. This is a brief description of the human brain, of course.

When the first computers appeared, those like ENIAC and BINAC, fiction writers and even some science writers had a field day turning the machines into diabolical “brains.” Whether or not the computer really thinks remains a controversial question. Some top scientists claim that the computer will eventually be far smarter than its human builder; equally reputable authorities are just as sure that no computer will ever have an original thought in its head. Perhaps a safe middle road is expressed with the title of this book; namely that the machine is simply an extension of the human brain. A high-speed abacus or slide rule, if you will; accurate and foolproof, but a moron nonetheless.

There are some interesting machine-brain parallels, of course. Besides its ability to do mathematics, the computer can perform logical reasoning and even make decisions. It can read and translate; remembering is a basic part of its function. Scientists are now even talking of making computers “dream” in an attempt to come up with new ideas!

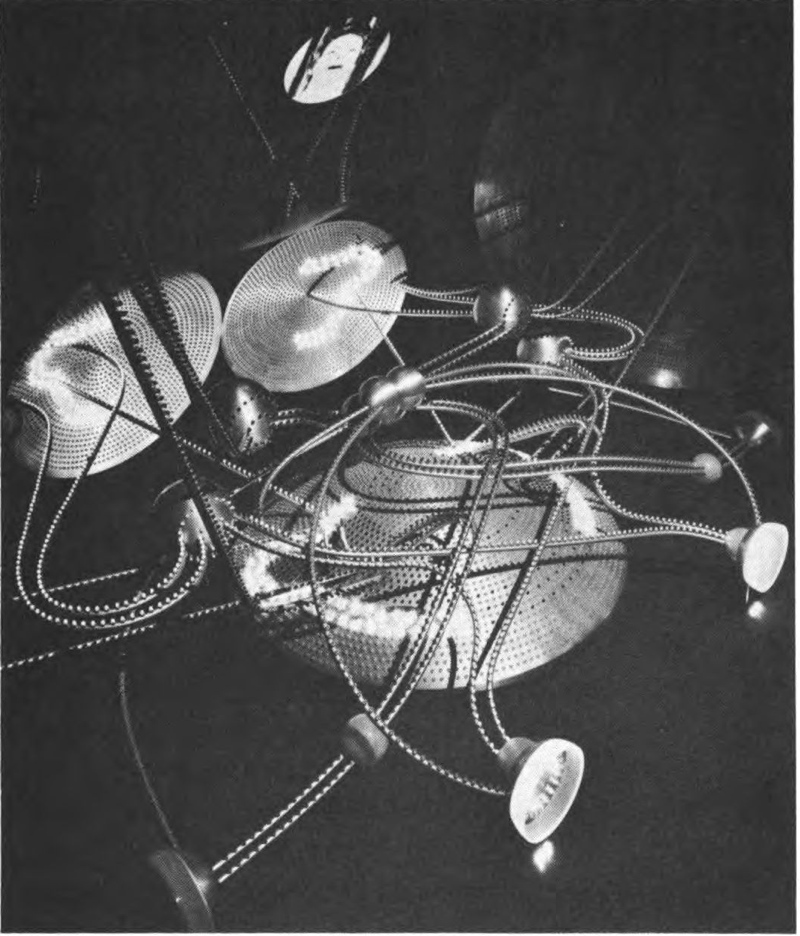

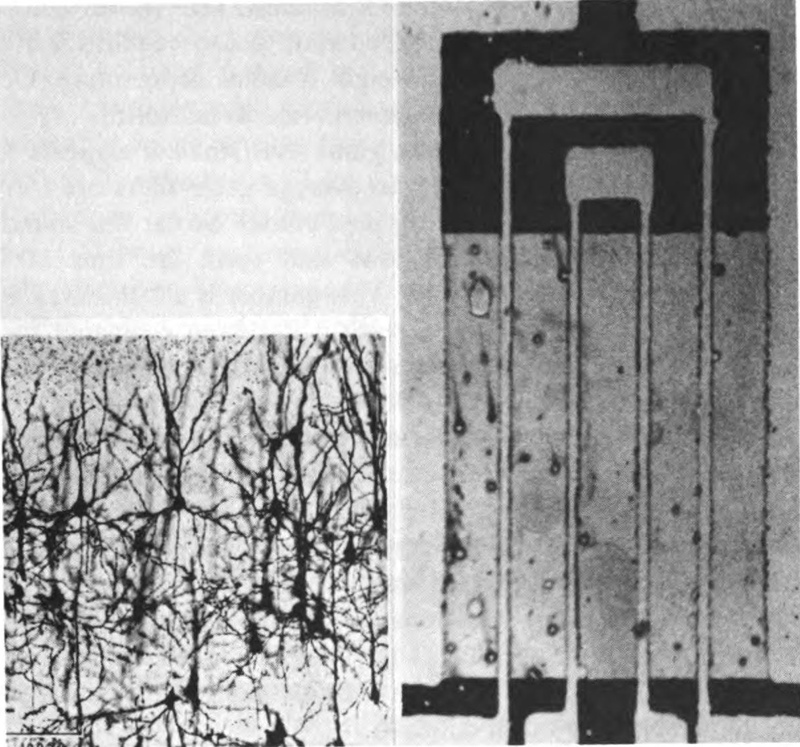

More similarities are being discovered or suggested. For instance, the interconnections in a computer are being compared with, and even crudely patterned after, the brain’s neurons. A new scientific discipline, called “bionics,” concerns itself with such studies. Far from being a one-way street, bionics works both ways so that engineers and biologists alike benefit. In fact, some new courses being taught in universities are designed to “bridge the gap between engineering and biology.”

At one time the only learning a computer had was “soldered in”; today the machines are being “forced” to learn by the application of punishment or reward as necessary. “Free” learning in computers of the Perceptron class is being experimented with. These studies, and statements like those of renowned scientist Linus Pauling that he expects a “molecular theory” of learning in human beings to be developed, are food for thought as we consider the parallels our electronic machines share with us. Psychologists at the University of London foresee computers not only training humans, but actually watching over them and predicting imminent nervous breakdowns in their charges!

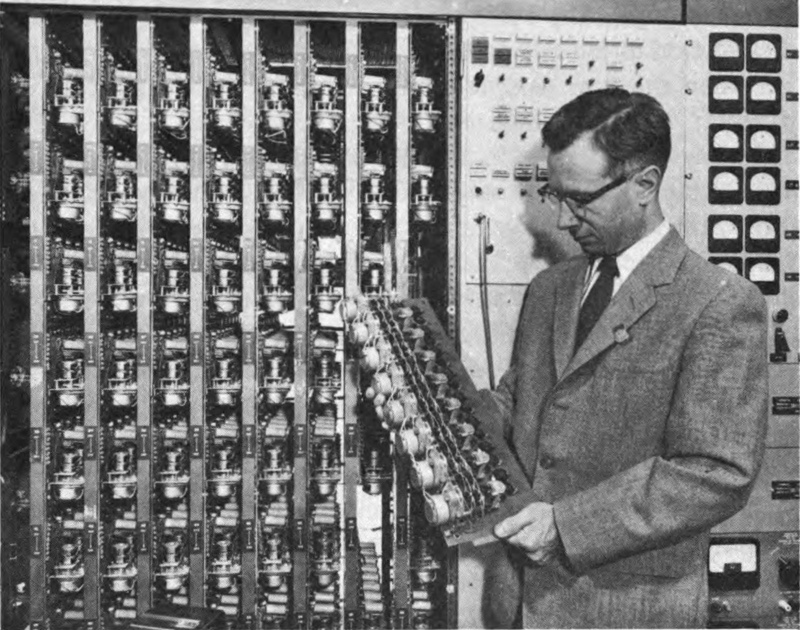

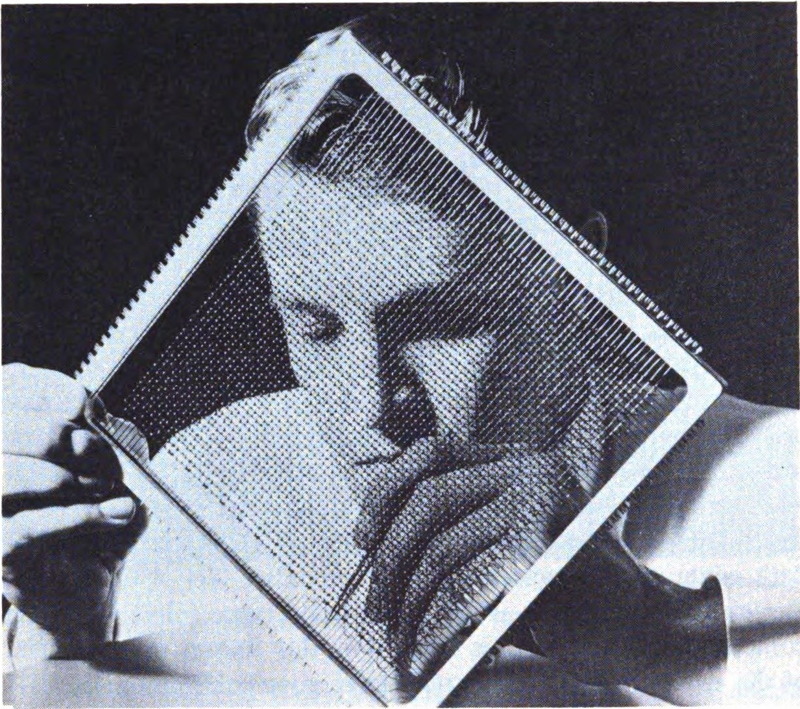

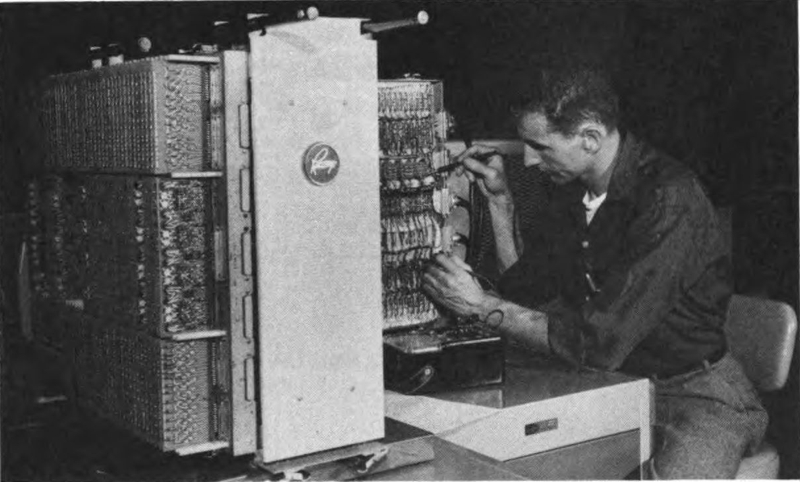

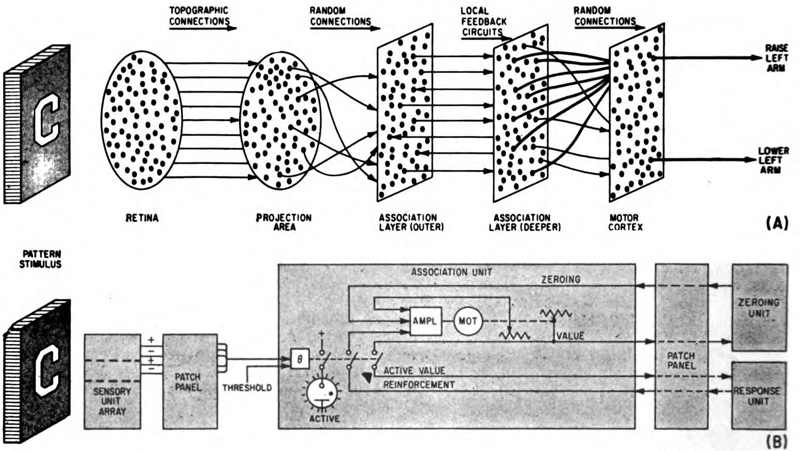

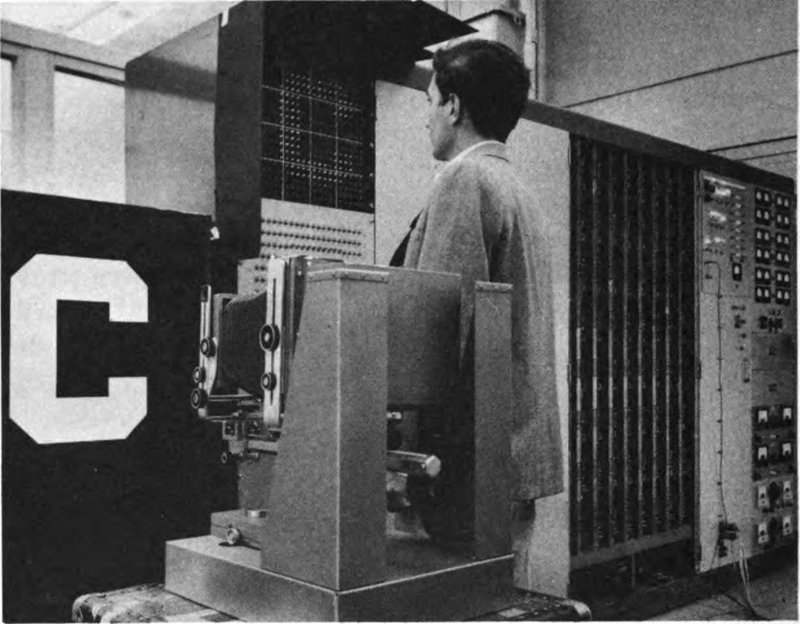

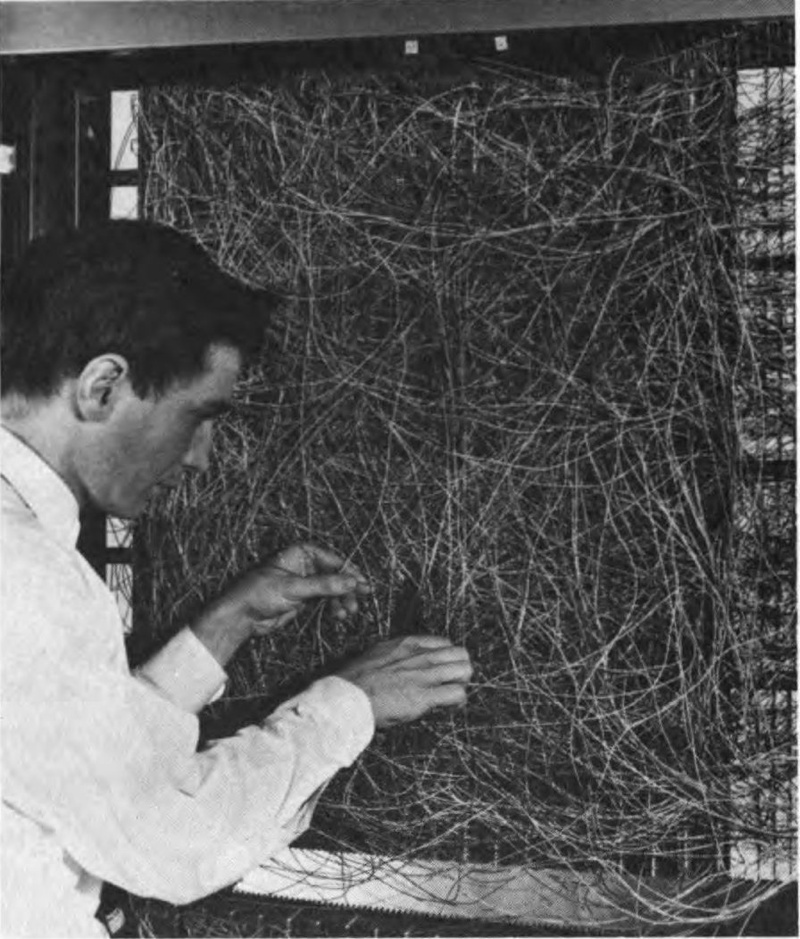

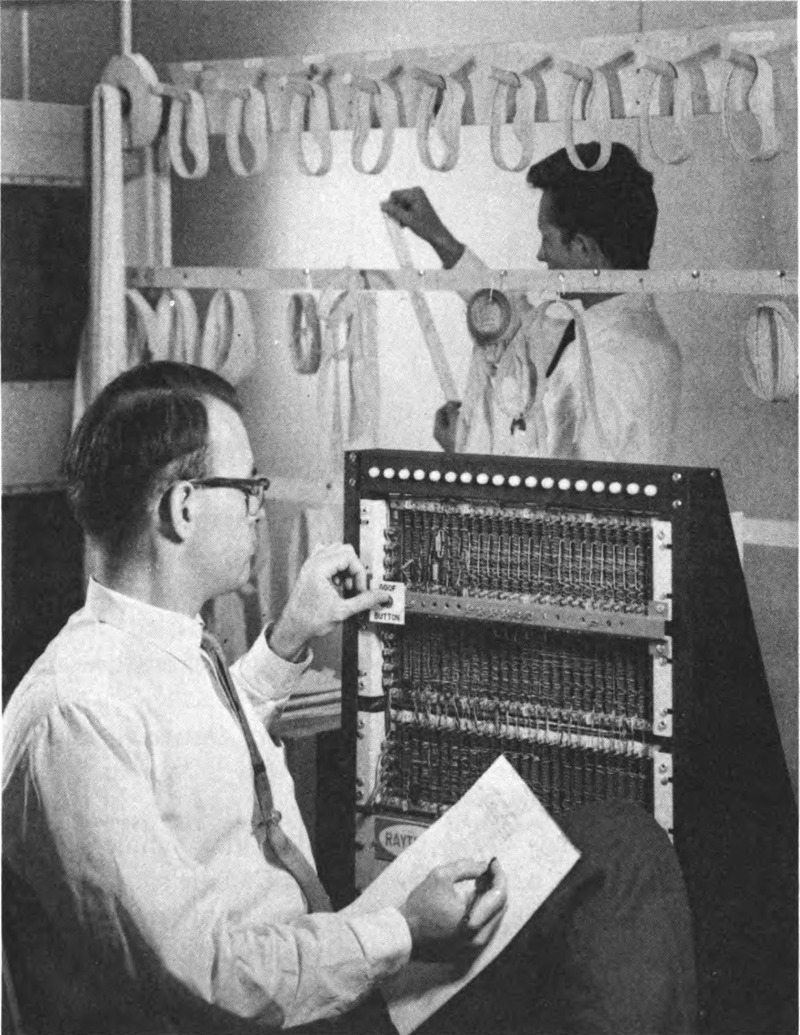

Cornell Aeronautical Laboratory

Bank of “association” units in Mark I Perceptron, a machine that “learns” from experience.

To demonstrate their skill many computers play games of tick-tack-toe, checkers, chess, Nim, and the like. A simple electromechanical computer designed for young people to build can be programmed to play tick-tack-toe expertly. Checker- and chess-playing computers are more sophisticated, many of them learning as they play and capable of an occasional move classed as brilliant by expert human players. The IBM 704 computer has been programmed to inspect the results of its possible decisions several moves ahead and to select the best choice. At the end of the game it prints out the winner and thanks its opponent for the game. Rated as polite, but only an indifferent player by experts, the computer is much like the checker-playing dog whose master scoffed at him for getting beaten three games out of five. Chess may well be an ultimate challenge for any kind of brain, since the fastest computer in operation today could not possibly work out all the possible moves in a game during a human lifetime!
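The idea of inspecting possible decisions several moves ahead and selecting the best one can be sketched as a depth-limited game-tree search. What follows is only an illustration, not the IBM 704 program: it assumes a single-pile version of Nim in which each player removes one to three counters and whoever takes the last counter wins, and the names `lookahead`, `best_move`, and `MAX_TAKE` are hypothetical.

```python
MAX_TAKE = 3  # assumed rule: a player removes 1 to 3 counters per turn


def lookahead(pile: int, depth: int) -> int:
    """Score a position from the point of view of the player about to move.

    +1 means a forced win was found, -1 a forced loss, and 0 means the
    search ran out of depth before the outcome could be decided.
    """
    if pile == 0:
        return -1  # the opponent just took the last counter and won
    if depth == 0:
        return 0  # lookahead budget exhausted: outcome unknown
    best = -1
    for take in range(1, min(MAX_TAKE, pile) + 1):
        # A position that is good for the opponent is bad for us, so negate.
        best = max(best, -lookahead(pile - take, depth - 1))
    return best


def best_move(pile: int, depth: int) -> int:
    """Return how many counters to take, judged by looking `depth` moves ahead."""
    best_take, best_score = 1, -2
    for take in range(1, min(MAX_TAKE, pile) + 1):
        score = -lookahead(pile - take, depth - 1)
        if score > best_score:
            best_take, best_score = take, score
    return best_take


print(best_move(7, 10))  # counters to take from a pile of 7
```

With enough depth the search finds the move that leaves the opponent a losing pile. With too little depth it scores positions as unknown, which is the situation the passage describes for chess, where the full tree of moves is far too large to examine.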

As evidenced in the science-fiction treatment early machines got, the first computers were monsters at least in size. Pioneering design efforts on machines with the capacity of the brain led to plans for something roughly the size of the Pentagon, equipped with its own Niagara for power and cooling, and a price tag the world couldn’t afford. As often seems to happen when a need arises, though, new developments have come along to offset the initial obstacles of size and cost.

One such development was the transistor and other semiconductor devices. Tiny and rugged, these components require little power. With the old vacuum-tubes replaced, computers shrank immediately and dramatically. On the heels of this micro-miniaturization have come new and even smaller devices called “ferrite cores” and “cryotrons” using magnetism and supercold temperatures instead of conventional electronic techniques.

As a result, an amazing number of parts can be packed into a tiny volume. So-called “molecular electronics” now seems to be a possibility, and designers of computers have a gleam in their eyes as they consider progress being made toward matching the “packaging density” of the brain. This human computer has an estimated 100 billion parts per cubic foot!

We have talked of reading and translating. Some new computers can also accept voice commands and speak themselves. Others furnish information in typed or printed form, punched cards, or a display on a tube or screen.

Like us, the computer can be frustrated by a task beyond its capabilities. A wrong command can set its parts clicking rapidly but in futile circles. Early computers, for example, could be panicked by the order to divide a number by zero. The solution to that problem of course is infinity, and the poor machine had a hard time trying to make such an answer good.

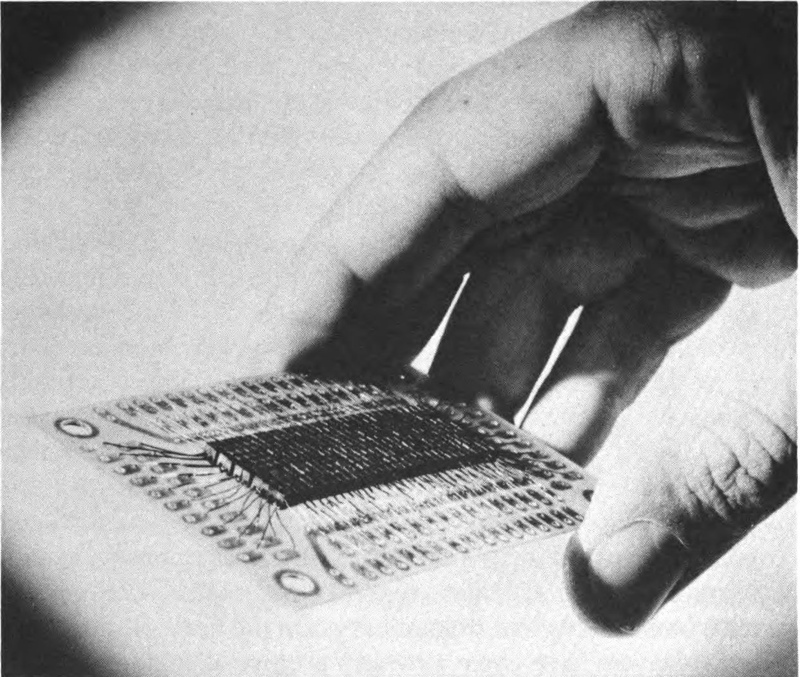

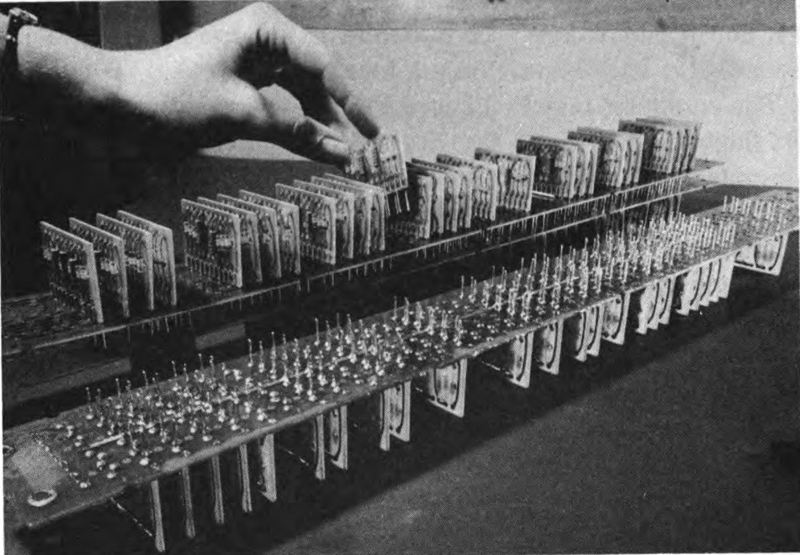

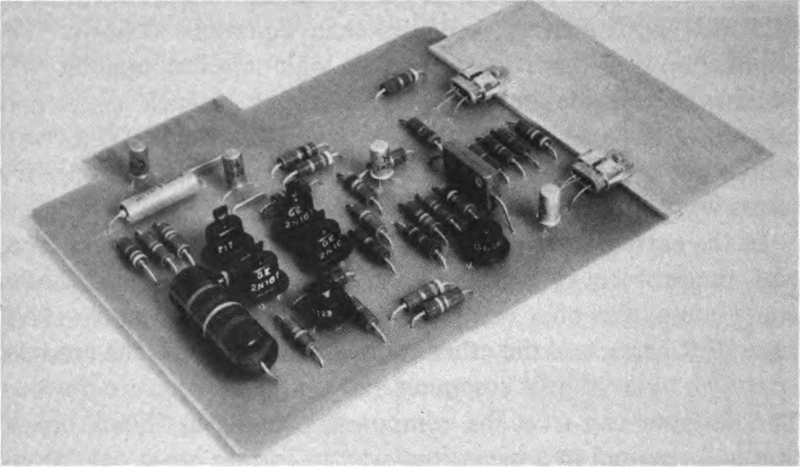

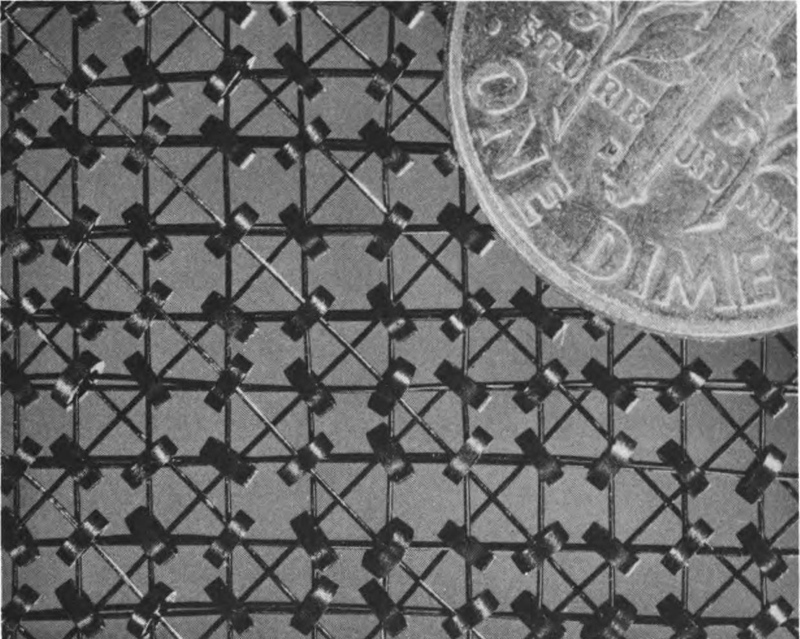

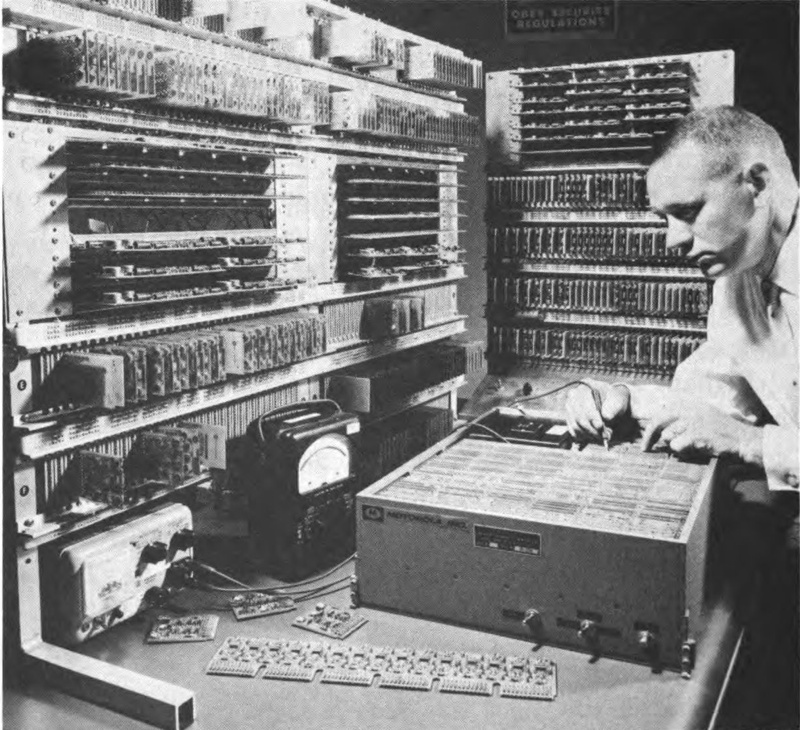

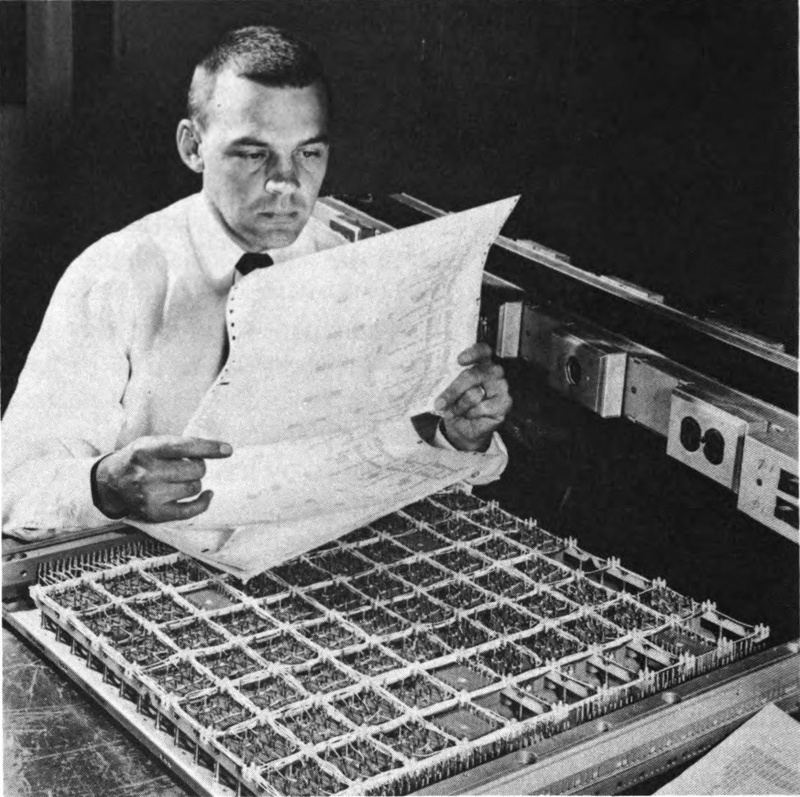

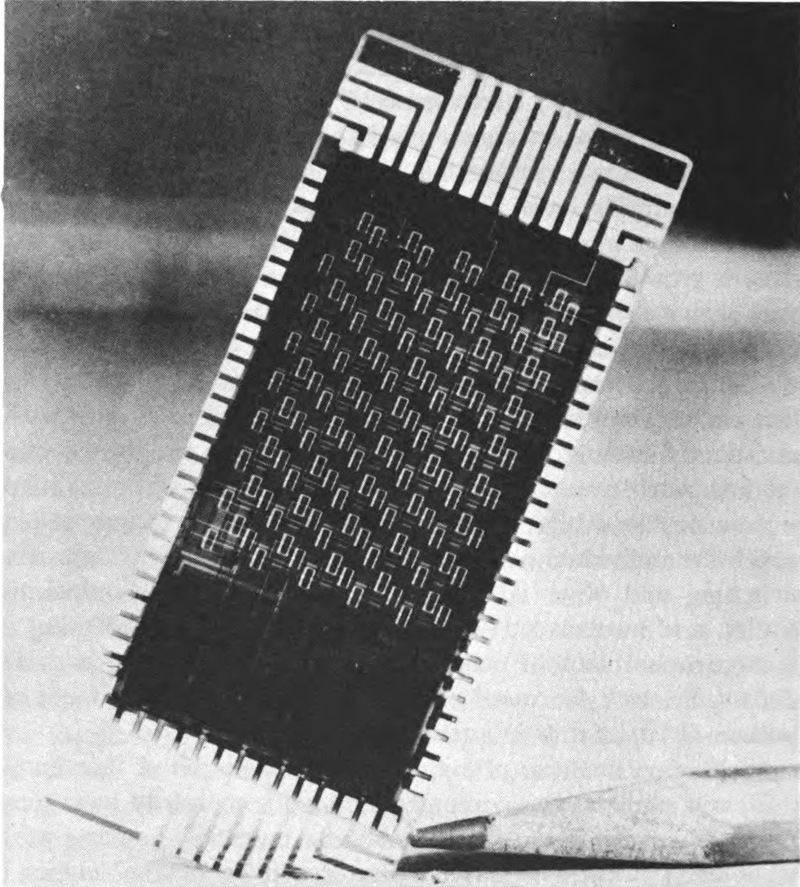

Aeronutronic Division, Ford Motor Co.

This printed-circuit card contains more than 300 BIAX memory elements. Multiples of such cards mounted in computers store large amounts of information.

There are other, quainter stories like that of the pioneer General Electric computer that simply could not function in the dark. All day long it hummed efficiently, but problems left with it overnight came out horribly botched for no reason that engineers could discover. At last it was found that a light had to be left burning with the scary machine! Neon bulbs in the computer were enough affected by light and darkness that the delicate electronic balance of the machine had been upset.

Among the computer’s unusual talents is the ability to compose music. Such music has been published and is of a quality to give rise to thoughtful speculation that perhaps great composers are simply good selectors of music. In other words, all the combinations of notes and meter exist: the composer just picks the right ones. No less an authority than Aaron Copland suggests that “we’ll get our new music by feeding information into an electronic computer.” Not content with merely writing music, some computers can even play a tune. At Christmas time, carols are rendered by computers specially programmed for the task. The result is not unlike a melody played on a pipe organ.

In an interesting switch of this musical ability on the part of the machine, Russian engineers check the reliability of their computers by having them memorize Mozart and Grieg. Each part of the complex machines is assigned a definite musical value, and when the composition is “played back” by the computer, the engineer can spot any defects existing in its circuitry. Such computer maintenance would seem to be an ideal field for the music lover.

In a playful mood, computers match pennies with visitors, explain their inner workings as they whiz through complex mathematics, and are even capable of what is called heuristic reasoning. This amounts to playing hunches to reach short-cut solutions to otherwise unsolvable problems. A Rand Corporation computer named JOHNNIAC demonstrated this recently. It was given some basic axioms and asked to prove some theorems. JOHNNIAC came up with the answers, and in one case produced a proof that was simpler than that given in the text. As one scientist puts it, “If computers don’t really think, they at least put on a pretty creditable imitation of the real thing.”

Computers are here to stay; this has been established beyond doubt. The only question remaining is how fast the predictions made by dreamers and science-fiction writers, and now by sober scientists, will come to be a reality. When we consider that in the few years since the 1953 crop of computers, their capacity and speed have been increased more than fiftyfold, and are expected to jump another thousandfold in two years, these dreams begin to sound more and more plausible.

One quite probable use for computers is medical diagnosis and prescription of treatment. Electronic equipment can already monitor an ailing patient, and send an alarm when help is needed. We may one day see computers with a built-in bedside manner aiding the family doctor.

The accomplished inroads of computing machines in business are as nothing to what will eventually take place. Already computer “game-playing” has extended to business management, and serious executives participate to improve their administrative ability. We speak of decision-making machines; business decisions are logical applications for this ability. Computers have been given the job of evaluating personnel and assigning salaries on a strictly logical basis. Perhaps this is why in surveys questioning increased use of the machines, each executive level in general tends to rate the machine’s ability just below its own.

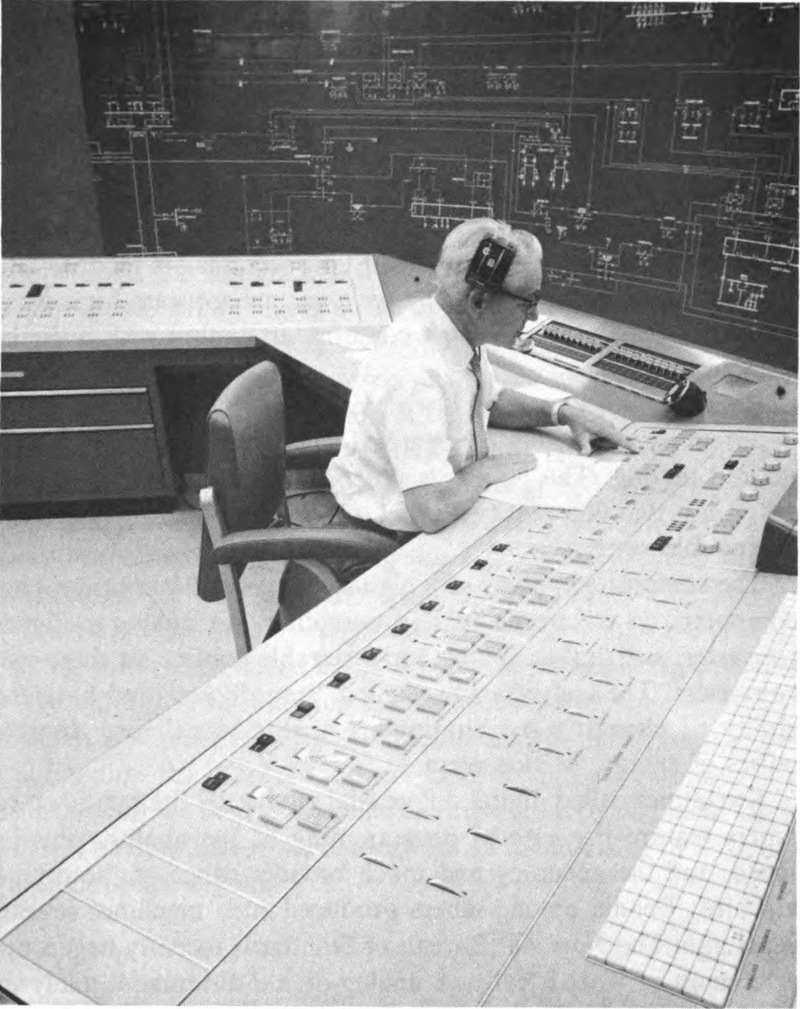

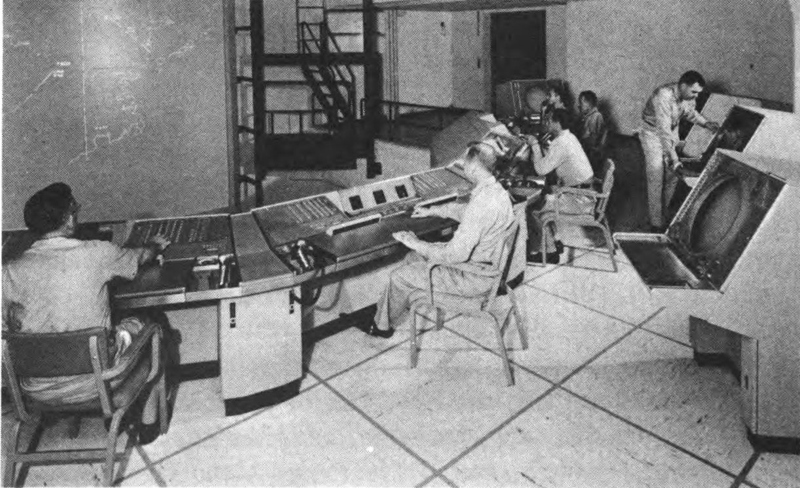

Other games played by the computer are war games, and computers like SAGE are well known. This system not only monitors all air activity but also makes decisions, assigns targets, and then even flies the interceptor planes and guided missiles on their missions. Again in the sky, the increase of commercial air traffic has perhaps reached the limit of human ability to control it. Computers are beginning to take over here too, planning flights and literally flying the planes.

Surface transport can also be computer-controlled. Railroads are beginning to use the computer techniques, and automatic highways are inevitable. Ships also benefit, and special systems coupled to radar can predict courses and take corrective action when necessary.

Men seem to have temporarily given up trying to control the weather, but using computers, meteorologists can take the huge mass of data from all over the world and make predictions rapidly enough to be of use.

We have talked of the computer’s giant strides in banking. Its wide use in stores is not far off. An English computer firm has designed an automatic supermarket that assembles ordered items, prices them, and delivers them to the check stand. At the same time it keeps a running inventory, price record, and profit and loss statement, besides billing the customer with periodic statements. The storekeeper will have only to wash the windows and pay his electric power bill.

Even trading stamps may be superseded by computer techniques that keep track of customer purchases and credit him with premiums as he earns them. Credit cards have helped pioneer computer use in billing; it is not farfetched to foresee the day when we are issued a lifetime, all-inclusive credit card—perhaps with our birth certificate!—a card with our thumbprint on it, that will buy our food, pay our rent and utilities and other bills. A central computer system will balance our expenses against deposits and from time to time let us know how we stand financially.

As with many other important inventions, the computer and its technology were spurred by war and are aided now by continuing threats of war. It is therefore pleasant to think on the possibilities of a computer system “programmed” for peace: a gigantic, worldwide system whose input includes all recorded history of all nations, all economic and cultural data, all weather information and other scientific knowledge. The output of such a machine hopefully would be a “best plan” for all of us. Such a computer would have no ax to grind and no selfish interests unless they were fed into it.

Given all the facts, it would punch out for us a set of instructions that would guarantee us the best life possible. This has long been a dream of science writers. H. G. Wells was one of these, suggesting a world clearinghouse of information in his book World Brain written in the thirties. In this country, scientist Vannevar Bush suggested a similar computer called “Memex” which could store huge amounts of data and answer questions put to it.

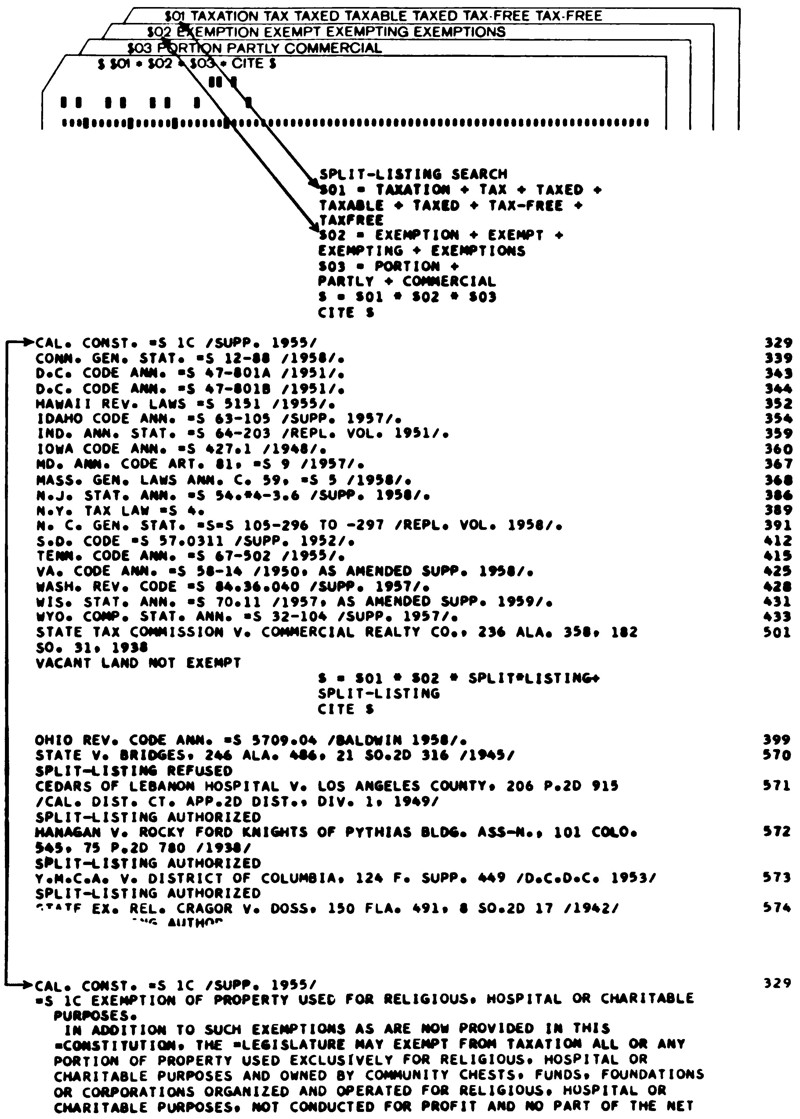

The huge amounts of information (books, articles, speeches, and records of all sorts) are beginning to make an efficient information retrieval system absolutely necessary. Many cases have been noted in which much time and effort are spent on a project which has already been completed but then has become lost in the welter of literature crammed into libraries. The computer is a logical device for such work; in a recent test such a machine scored 86 per cent in its efforts to locate specific data on file. Trained workers rated only 38 per cent in the same test!

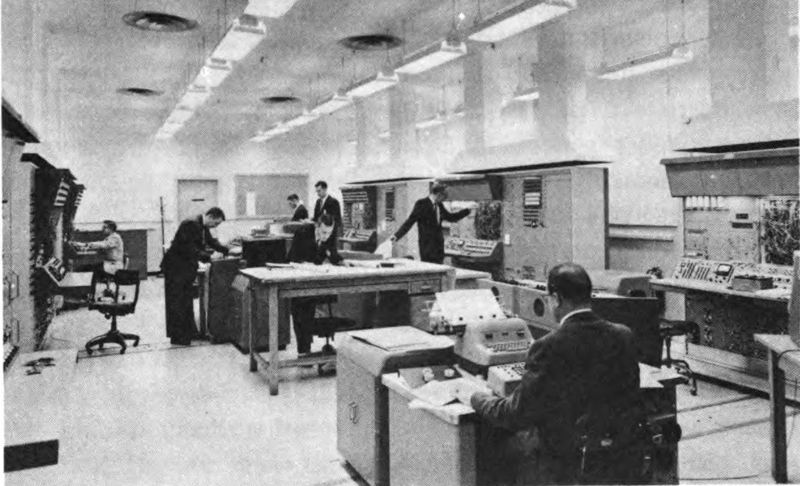

The Boeing Co.

Engineers using computers to solve complex problems in aircraft design.

The science of communication is advancing along with that of computers, and can help make the dream of a worldwide “brain” come true. Computers in distant cities are now linked by telephone lines or radio, and high-speed techniques permit the transmission of many thousands of words per second across these “data links.” An interesting sidelight is the fact that an ailing computer can be hooked by telephone line with a repair center many miles away and its ailments diagnosed by remote control. Communications satellites that are soon to be dotting the sky like tiny moons may well play a big part in computing systems of the future. Global weather prediction and worldwide coordination of trade immediately come to mind.

While we envision such far-reaching applications, let’s not lose sight of the possibilities for computer use closer to home, right in our homes as a matter of fact. Just as early inventors of mechanical power devices did not foresee the day when electric drills and saws for hobbyists would be commonplace and the gasoline engine would do such everyday chores as cutting the grass in our yards, the makers of computers today cannot predict how far the computer will go in this direction. Perhaps we may one day buy a “Little Dandy Electro-Brain” and plug it into the wall socket for solving many of the everyday problems we now often guess wrong on.

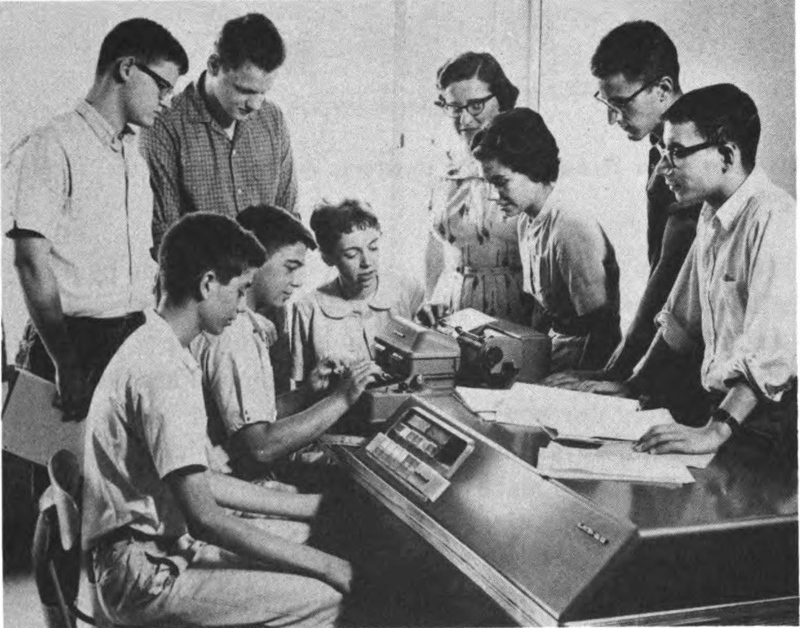

Royal McBee Corp.

Students at Staples High School, Westport, Connecticut, attend a summer session to learn the techniques of programming and operating an electronic computer.

The Saturday Evening Post

“Herbert’s been replaced by an electronic brain—one of the simpler types.”

Some years ago a group of experts predicted that by 1967 the world champion chess player would be an electronic computer. No one has yet claimed that we would have a president of metal and wire, but some interesting signposts are being put up. Computers are now used widely to predict the result of elections. Computers count the votes, and some have suggested that computers could make it possible for us to vote at home. The government is investigating the effectiveness of a decision-making computer as a stand-by aid for the President in this complex age we are moving into. No man has the ability to weigh every factor and to make decisions affecting the world. Perhaps a computer can serve in an advisory capacity to a president or to a World Council; perhaps—

It is comforting to remember that men will always tell the computer what it is supposed to do. No computer will ever run the world any more than the cotton gin or the steam engine or television runs the world. And in an emergency, we can always pull out the wallplug, can’t we?

—James A. Garfield

Although it seemed to burst upon us suddenly, the jet airplane can trace its beginnings back through the fabric wings of the Wrights to the wax wings of Icarus and Daedalus, and the steam aeolipile of Hero in ancient Greece. The same thing is true of the computer, the “thinking machine” we are just now becoming uncomfortably aware of. No brash upstart, it has a long and honorable history.

Naturalists tell us that man is not the only animal that counts. Birds, particularly, also have an idea of numbers. Birds, incidentally, use tools too. We seem to have done more with the discoveries than our feathered friends; at least no one has yet observed a robin with a slide rule or a snowy egret punching the controls of an electronic digital computer. However, the very notion of mere birds being tool and number users does give us an idea of the antiquity and lengthy heritage of the computer.

The computer was inevitable when man first began to make his own problems. When he lived as an animal, life was far simpler, and all he had to worry about was finding game and plants to eat, and keeping from being eaten or otherwise killed himself. But when he began to dabble in agriculture and the raising of flocks, when he began to think consciously and to reflect about things, man needed help.

First came the hand tools that made him more powerful, the spears and bows and arrows and clubs that killed game and enemies. Then came the tools to aid his waking brain. Some 25,000 years ago, man began to count. This was no mean achievement, the dim, foggy dawning of the concept of number, perhaps in the caves in Europe where the walls have been found marked with realistic drawings of bison. Some budding mathematical genius in a skin garment only slightly shaggier than his mop of hair stared blinking at the drawings of two animals and then dropped his gaze to his two hands. A crude, tentative connection jelled in his inchoate gray matter and he shook his head as if it hurt. It was enough to hurt, this discovery of “number,” and perhaps this particular pioneer never again put two and two together. But others did; if not that year, the next.

Armed with his grasp of numbers, man didn’t need to draw two mastodons, or sheep, or whatever. Two pebbles would do, or two leaves or two sticks. He could count his children on his fingers—we retain the expression “a handful” to this day, though often our children are another sort of handful. Of course, the caveman did not of a sudden do sums and multiplications. When he began to write, perhaps 5,000 years later, he had formed the concept of “one,” “two,” “several,” and “many.”

Besides counting his flock and his children, and the number of the enemy, man had need for counting in another way. There were the seasons of the year, and a farmer or breeder had to have a way of reckoning the approach of new life. His calendar may well have been the first mathematical device sophisticated enough to be called a computer.

It was natural that numbers be associated with sex. The calendar was related to the seasons and the bearing of young. The number three, for example, took on mystic and potent connotation, representing as it did man’s genitals. Indeed, numbers themselves came quaintly to have sex. One, three, and the other odd numbers were male; the symmetrical, even numbers logically were female.

The notion that man used the decimal system because of his ten fingers and toes is general, but it was some time before this refinement took place. Some early peoples clung to a simpler system with a base of only two; and interestingly a tribe of Australian aborigines counts today thus: enea (1), petchaval (2), enea petchaval (3), petchaval petchaval (4). Before we look down our noses at this naïve system, let us consider that high-speed electronic computers use only two values, 1 and 0.
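To set the two schemes side by side, the counting words quoted above and the two-valued notation of the electronic computer can each be written as a few lines of Python. This is only a sketch under stated assumptions: the text gives the words for 1 through 4 only, so extending the pattern by repeating the word for two is a guess, and the function names `pair_count` and `binary_digits` are hypothetical. Note that the first scheme is additive, a tally kept in twos, while the computer's notation is positional.

```python
def pair_count(n: int) -> str:
    """Name n as an optional 'one' followed by a tally of 'twos'.

    Assumption: numbers above 4 simply repeat the word for two.
    """
    if n < 1:
        raise ValueError("the scheme has no word for zero or negative numbers")
    words = ["enea"] * (n % 2) + ["petchaval"] * (n // 2)
    return " ".join(words)


def binary_digits(n: int) -> str:
    """Write n in positional base two, using only the digits 1 and 0."""
    if n < 0:
        raise ValueError("non-negative integers only")
    digits = ""
    while True:
        digits = str(n % 2) + digits  # the remainder is the next digit, right to left
        n //= 2
        if n == 0:
            return digits


for n in range(1, 5):
    print(n, pair_count(n), binary_digits(n))
```

The additive tally grows by one word for every two units counted, whereas the positional form needs only one more digit each time the number doubles.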

But slowly symbols evolved for more and more numbers, numbers that at first were fingers, and then perhaps knots tied in a strip of hide. This crude counting aid persists today, and cowboys sometimes keep rough tallies of a herd by knotting a string for every five that pass. Somehow numbers took on other meanings, like those that figure in courtship in certain Nigerian tribes. In their language, the number six also means “I love you.” If the African belle is of a mind when her boyfriend tenderly murmurs the magic number, she replies in like tone, “Eight!”, which means “I feel the same way!”

From the dawn of history there have apparently been two classes of us human beings, the “haves” and the “have nots.” Nowadays we get bills or statements from our creditors; in early days, when a slate or clay tablet was the document, a forerunner of the carbon copy or duplicate paper developed. Tallies were marked for the amount of the debt, the clay tablet was broken across the marks, and creditor and debtor each took half. No chance for cheating, since a broken half would fit only the proper mate!

Numbers at first applied only to discrete, or distinctly separate, things. The scratches on a calendar, the tallies signifying the count of a flock; these were more easily reckoned. The idea of another kind of number inspired the first clocks. Here was a monumental breakthrough in mathematics. Nature provided the sunrise that clearly marked the beginning of each day; man himself thought to break the day into “hours,” or parts of the whole. Such a division led eventually to measurement of size and weight. Now early man knew not only how many goats he had, but how many “hands” high they were, and how many “stones” they weighed. This further division ordained another kind of mechanical computer man must someday contrive—the analog.

The first counting machines used were pebbles or sea shells. For the Stone Age businessman to carry around a handful of rocks for all his transactions was at times awkward, and big deals may well have gone unconsummated for want of a stone. Then some genius hit on the idea of stringing shells on a bit of reed or hide; or more probably the necklace came first as adornment and the utilitarian spotted it after this style note had been added. At any rate, the portable adding machine became available and our early day accountant grew adroit at sliding the beads back and forth on the string. From here it was only a small step, taken perhaps as early as 3000 B.C., to the rigid counter known as the abacus.

The word “counter” is one we use in everyday conversation. We buy stock over the counter; some deals are under the counter. We all know what the counter itself is—that wide board that holds the cash register and separates us from the shopkeeper. At one time the cash register was the counter; actually the counting board had rods of beads like the abacus, or at least grooves in which beads could be moved. The totting up of a transaction was done on the “counter”; it is still there although we have forgotten whence came its name.

The most successful computer used for the next 5,000 years, the portable counter, or the abacus, is a masterpiece of simplicity and effectiveness. Though only a frame with several rows of beads, it is sophisticated enough that as late as 1947 Kiyoshi Matsuzake of the Japanese Ministry of Communications, armed with the Japanese version, a soroban, bested Private Tom Wood of the U. S. Army of Occupation punching the keys of an up-to-the-minute electric calculating machine in four of five problem categories! Only recently have Japanese banks gone over to modern calculators, and shopkeepers there and in other lands still conduct business by this rule of thumb and forefinger.

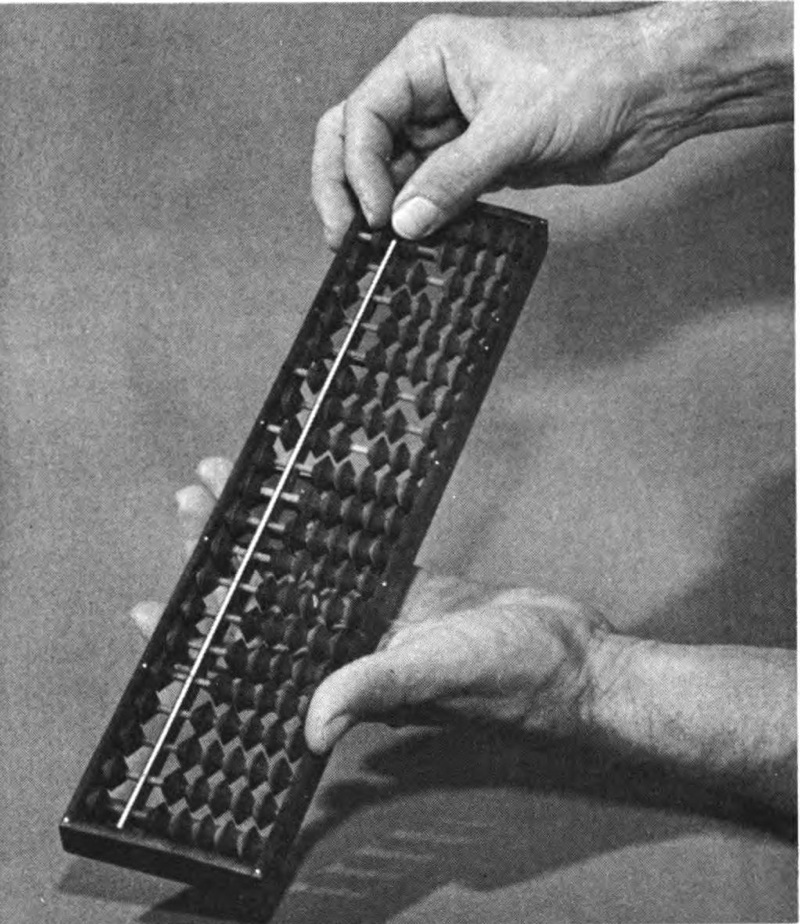

The abacus, ancient mechanical computer, is still in use in many parts of the world. Here is the Japanese version, the soroban, with problem being set up.

The name abacus comes to us by way of the Greek abax, meaning “dust.” Scholars infer that early sums were done schoolboy fashion in Greece with a stylus on a dusty slate, and that the word was carried over to the mechanical counter. The design has changed but little over the years and all abacuses bear a resemblance. The major difference is the number of beads on each row, determined by the mathematical base used in the particular country. Some in India, for example, were set up to handle pounds and shillings for use in shops. Others have a base of twelve. The majority, however, use the decimal system. Each row has seven beads, with a runner separating one or two beads from the others. Some systems use two beads on the narrow side, some only one; this is a mathematical consideration with political implications, incidentally: The Japanese soroban has the single-bead design; Korea’s son pan uses two. When Japan took over Korea the two-bead models were tabu, and went out of use until the Koreans were later able to win their independence again.
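The way a decimal digit is set on one row of beads can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the usual convention, not spelled out in the text, that a bead on the narrow side of the runner counts five and a bead on the wide side counts one, and the names `digit_to_beads`, `number_to_rods`, and `rods_to_number` are hypothetical.

```python
def digit_to_beads(digit):
    """Return (five_beads, one_beads) moved to the runner for a digit 0-9.

    Assumption: narrow-side beads count five, wide-side beads count one.
    """
    if not 0 <= digit <= 9:
        raise ValueError("an abacus row holds a single decimal digit")
    return divmod(digit, 5)


def number_to_rods(number):
    """Set a non-negative whole number on the abacus, one row per digit."""
    return [digit_to_beads(int(ch)) for ch in str(number)]


def rods_to_number(rods):
    """Read the rows back, most significant row first."""
    total = 0
    for fives, ones in rods:
        total = total * 10 + 5 * fives + ones
    return total


rods = number_to_rods(1962)
print(rods)                  # one (five_beads, one_beads) pair per row
print(rods_to_number(rods))  # reads back the number that was set
```

Under this convention no digit needs more than one five-bead and four one-beads, which is consistent with the single-bead soroban design mentioned above.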

About the only thing added to the ancient abacus in recent years is a movable arrow for marking the decimal point. W. D. Loy patented such a gadget in the United States. Today the abacus remains a useful device, not only for business, but also for the teaching of mathematics to youngsters, who can literally “grasp their numbers.” For that reason it ought also to be helpful to the blind, and as a therapeutic aid for manual dexterity. Apparently caught up in the trend toward smaller computers, the abacus has been miniaturized to the extent that it can be worn as earrings or on a key chain.

Even with mechanical counters, early mathematicians needed written numbers. The caveman’s straight-line scratches gave way to hieroglyphics, to the Sumerian cuneiform “wedges,” to Roman numerals, and finally to Hindu and Arabic. Until the numbers, 1, 2, 3, 4, 5, 6, 7, 8, 9, and that most wonderful of all, 0 or zero, computations of any but the simplest type were apt to be laborious and time-consuming. Even though the Romans and Greeks had evolved a decimal system, their numbering was complex. To count to 999 in Greek required not ten numbers but twenty-seven. The Roman number for 888 was DCCCLXXXVIII. Multiplying CCXVII times XXIX yielded an answer of MMMMMMCCXCIII, to be sure, but not without some difficulty. It required an abacus to do any kind of multiplication or division.
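The Roman figures quoted above can be checked with a short Python sketch. The helper names `to_roman` and `from_roman` are hypothetical, and the converter simply repeats M for each thousand, as the text itself does for the product.

```python
# Value/symbol pairs, largest first, including the subtractive forms.
ROMAN_VALUES = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]
SYMBOLS = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def to_roman(number):
    """Write a positive whole number in Roman numerals."""
    parts = []
    for value, symbol in ROMAN_VALUES:
        count, number = divmod(number, value)
        parts.append(symbol * count)
    return "".join(parts)


def from_roman(text):
    """Read Roman numerals; a smaller symbol before a larger one is subtracted."""
    total = 0
    for i, ch in enumerate(text):
        value = SYMBOLS[ch]
        if i + 1 < len(text) and SYMBOLS[text[i + 1]] > value:
            total -= value
        else:
            total += value
    return total


product = from_roman("CCXVII") * from_roman("XXIX")  # 217 times 29
print(product, to_roman(product))
print(to_roman(888))
```

Note that the multiplication itself is done on ordinary place-value integers; the Roman strings serve only for input and output, which is precisely the difficulty the passage describes.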

Indeed, it was perhaps from the abacus that the clue to Arabic simplicity came. The Babylonians, antedating the Greeks, had nevertheless gone them one better in arithmetic by using a “place” system. In other words, the position of a number denoted its value. The Babylonians simply left an empty space between cuneiform number symbols to show an empty space in this positional system. Sometime prior to 300 B.C. a clever mathematician tired of losing track and punched a dot in his clay tablet to fill the empty space and avoid possible error.

The abacus shows these empty spaces on its rows of beads, too, and finally the Hindus combined their nine numerals with a “dot with a hole in it” and gave the mathematical world the zero. In Arabic it was sifr, corrupted to zephirum in Latin, and gives us today both cipher and zero. This enigma of nothingness would one day be used by Leibnitz to prove that God made the world; it would later become half the input of the electronic computer! Meantime, it was developed independently in various other parts of the world, the ancient Mayans being one example.

Impressed as we may be by an electronic computer, it may take some charity to recognize its forebears in the scratchings on a rock. To call the calendar a computer, we must in honesty add a qualifying term like “passive.” The same applies to the abacus despite its movable counters. But time, which produced the simple calendar, also furnished the incentive for the first “active” computers too. The hourglass is a primitive example, as is the sundial. Both had an input, a power source, and a readout. The clock interestingly ended up with not a decimal scheme, but one with a base of twelve. Early astronomers began conventionally bunching days into groups of ten, and located different stars on the horizon to mark the passage of the ten days. It was but a step from here to use these “decans,” as they were called, to further divide each night itself into segments. It turned out that 12 decans did the trick, and since symmetry was a virtue the daylight was similarly divided by twelve, giving us a day of 24 hours rather than 10 or 20.

From the simple hourglass and the more complex water clocks, the Greeks progressed to some truly remarkable celestial motion computers. One of these, built almost a hundred years before the birth of Christ, was recently found on the sea bottom off the Greek island of Antikythera. It had been aboard a ship which sank, and its discovery came as a surprise to scholars since history recorded no such complex devices for that era. The salvaged Greek computer was designed for astronomical work, showing locations of stars, predicting eclipses, and describing various cycles of heavenly bodies. Composed of dozens of gears, shafts, slip rings, and accurately inscribed plates, it was a computer in the best sense of the word and was not exceeded technically for many centuries.

The Roman engineer Vitruvius made an interesting observation when he said, “All machinery is generated by Nature and the revolution of the universe guides and controls. Our fathers took precedents from Nature—developed the comforts of life by their inventions. They rendered some things more convenient by machines and their revolutions.” Hindsight and language being what they are, today we can make a nice play on the word “revolution” as applied to the machine. The Antikythera computer was a prime example of what Vitruvius was talking about. Astronomy was such a complicated business that it was far simpler to make a model of the many motions than to diagram them or try to retain them in the mind.

There were, of course, some die-hard classicists who decried the use of machines to do the work of pure reasoning. Archytas, who probably invented the screw—or at least discovered its mechanical principle—attempted to apply such mechanical devices to the solving of geometrical problems. For this he was taken to task by purist Plato who sought to preserve the distinct division between “mind” and “machine.”

Yet the syllogistic philosophers themselves, with their major premise, minor premise, and conclusion, were unwittingly setting the stage for a different kind of computer—the logic machine. Plato would be horrified today to see crude decks of cards, or simple electromechanical contrivances, solving problems of “reason” far faster than he could; in fact, as fast as the conditions could be set into them!

Aristotle fathered the syllogism, or at least was first to investigate it rigorously. He defined it as a formal argument in which the conclusion follows logically from the premises. There are four common statements of this type:

| All S (for subject) | is P (for predicate) |

| No S (for subject) | is P |

| Some S (for subject) | is P |

| Some S (for subject) | is not P |

Thus, Aristotle might say “All men are mortal” or “No men are immortal” as his major premise. Adding an M (middle term), “Aristotle is a man,” as a minor premise, he could logically go on and conclude “Aristotle, being a man, is thus mortal.” Of course the syllogism unwisely used, as it often is, can lead to some ridiculously silly answers. “All tables have four legs. Two men have four legs. Thus, two men equal a table.”
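The difference between the sound syllogism and the silly one can be made concrete by treating each term as a set. The following Python sketch is an illustration only; the set contents and the names `all_are`, `valid_example`, and `faulty_example` are made up for the purpose.

```python
def all_are(subject, predicate):
    """'All S is P' holds when every member of S is also a member of P."""
    return subject <= predicate


def valid_example():
    men = {"Aristotle", "Plato"}
    mortals = men | {"a horse"}
    major = all_are(men, mortals)       # All men are mortal.
    minor = "Aristotle" in men          # Aristotle is a man.
    conclusion = "Aristotle" in mortals  # Aristotle is mortal.
    return major, minor, conclusion


def faulty_example():
    four_legged = {"table", "two men", "a horse"}
    tables = {"table"}
    two_men = {"two men"}
    premise_1 = all_are(tables, four_legged)   # All tables have four legs.
    premise_2 = all_are(two_men, four_legged)  # Two men have four legs.
    conclusion = two_men == tables             # Two men equal a table?
    return premise_1, premise_2, conclusion


print(valid_example())
print(faulty_example())
```

In the second case both premises are true and the conclusion is false: sharing a predicate places two subjects inside the same larger set but says nothing about their being equal.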

Despite its weaknesses, the syllogism led eventually to the science of symbolic logic. The pathway was circuitous, even devious at times, but slowly the idea of putting thought down as letters or numbers to be logically manipulated to reach proper conclusions gained force and credence. While the Greeks did not have the final say, they did have words for the subject as they did for nearly everything else.

Let us leave the subject of pure logic for a moment and talk of another kind of computing machine, that of the mechanical doer of work. In the Iliad, Homer has Hephaestus, the god of natural fire and metalworking, construct twenty three-wheeled chariots which propel themselves to and fro bringing back messages and instructions from the councils of the gods. These early automatons boasted pure gold wheels, and handles of “curious cunning.”

Man has apparently been a lazy cuss from the start and began straightway to dream of mechanical servants to do his chores. In an age of magic and fear of the supernatural his dreams were fraught with such machines that turned into evil monsters. The Hebrew “golem” was made in the shape of man, but without a soul, and often got out of hand. Literature has perpetuated the idea of machines running amok, as the broom in “The Sorcerer’s Apprentice,” but there have been benevolent machines too. Tik-Tok, a latter-day windup man in The Road to Oz, could think and talk and do many other things men could do. He was not alive, of course, but he had the saving grace of always doing just what he “was wound up to do.”

Having touched on the subject of mechanical men, let us now return to mechanical logic. Since the Greeks, many men have traveled the road of reason, but some stand out more brightly, more colorfully, than others. Such a standout was the Spanish monk Ramón Lull. Lull was born in 1232. A court page, he rose in influence, married young, and had two children, but did not settle down to married domesticity. A wildly reckless romantic, he was given to such stunts as galloping his horse into church in pursuit of some lady who caught his eye. One such escapade led to a remorseful re-examination of himself, and dramatic conversion to Christianity.

He began to write books in conventional praise of Christ, but early in his writings a preoccupation with numbers appears. His Book of Contemplation, for example, actually contains five books for the five wounds of the Saviour, and forty subdivisions for the days He spent in the wilderness. There are 365 chapters for daily reading, plus one for reading only in leap years! Each chapter has ten paragraphs, symbolizing the ten commandments, and three parts to each chapter. These multiplied give thirty, for the pieces of silver. Besides religious and mystical connotations, geometric terms are also used, and one interesting device is the symbolizing of words and even phrases by letters. This ties in neatly with syllogism. A sample follows:

… diversity is shown in the demonstration that the D makes of the E and the F and the G with the I and the K, therefore the H has certain scientific knowledge of Thy holy and glorious Trinity.

This was only prologue to the Ars Magna, the “Great Art” of Ramón Lull. In 1274, the devout pilgrim climbed Mount Palma in search of divine help in his writings. The result was the first recorded attempt to use diagrams to discover and to prove non-mathematical truths. Specifically, Lull determined that he could construct mechanical devices that would perform logic to prove the validity of God’s word. Where force, in the shape of the Crusades, had failed, Lull was convinced that logical argument would win over the infidels, and he devoted his life to the task.

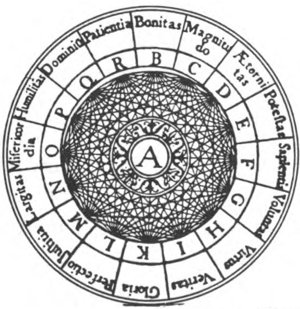

Renouncing his estate, including his wife and children, Lull devoted himself thenceforth solely to his Great Art. As a result of dreams he had on Mount Palma, the basis for this work was the assumption of simple premises or principles that are unquestionable. Lull arranged these premises on rotating concentric circles. The first of these wheels of logic was called A, standing for God. Arranged about the circumference of the wheel were sixteen other letters symbolizing attributes of God. The outer wheel also contained these letters. Rotating them produced 240 two-term combinations telling many things about God and His good. Other wheels prepared sermons, advised physicians and scientists, and even tackled such stumpers as “Where does the flame go when the candle is put out?”

Lull’s wheel. From the Enciclopedia universal illustrada, Barcelona, 1923.
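The figure of 240 two-term combinations from sixteen letters can be reproduced by imitating the turning of one wheel against the other. The sketch below is a rough model under stated assumptions: the sixteen letters are placeholders, the function name `wheel_pairs` is hypothetical, and each pairing is counted in order, so that (B, C) and (C, B) are distinct.

```python
from itertools import permutations

# Sixteen placeholder letters standing for the attributes on each wheel.
LETTERS = "BCDEFGHIKLMNOPQR"


def wheel_pairs(letters):
    """Turn the inner wheel one notch at a time and read off aligned pairs.

    A shift of zero would set every letter against itself, so it is skipped.
    """
    n = len(letters)
    pairs = []
    for shift in range(1, n):
        for i in range(n):
            pairs.append((letters[i], letters[(i + shift) % n]))
    return pairs


pairs = wheel_pairs(LETTERS)
print(len(pairs))  # 16 letters times 15 non-zero shifts

# The same set of pairs, produced directly as ordered selections of two.
print(set(pairs) == set(permutations(LETTERS, 2)))
```

Fifteen turns of the wheel, each yielding sixteen aligned pairs, account for the 240 combinations.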

Unfortunately for Lull, even divine help did not guarantee him success. He was stoned to death by infidels in Bugia, Africa, at the age of eighty-three. All his wheelspinning logic was to no avail in advancing the cause of Christianity there, and most mathematicians since have scoffed at his naïve devices as having no real merit. Far from accepting the Ars Magna, most scholars have been “Lulled into a secure sense of falsity,” finding it as specious as indiscriminate syllogism.

Yet Lull did leave his mark, and many copies of his wheels have been made and found useful. Where various permutations of numbers or other symbols are required, such a mechanical tool is often the fastest way of pairing them up. Even in the field of writing, a Lullian device was popular a few decades ago in the form of the “Plot Genii.” With this gadget the would-be author merely spun the wheels to match up various characters with interesting situations to arrive at story ideas. Other versions use cards to do the same job, and one called Plotto was used by its inventor William Wallace Cook to plot countless stories. Although these were perhaps not ideas for great literature, eager writers paid as much as $75 for the plot boiler.

Not all serious thinkers relegated Lull to the position of fanatic dreamer and gadgeteer. No less a mind than Gottfried Wilhelm von Leibnitz found much to laud in Lull’s works. The Ars Magna might well lead to a universal “algebra” of all knowledge, thought Leibnitz. “If controversies were to arise,” he then mused, “there would be no more reason for philosophers to dispute than there would for accountants!”

Leibnitz applied Lull’s work to formal logic, constructed tables of syllogisms from which he eliminated the false, and carried the work of the “gifted crank” a bit nearer to true symbolic logic. Leibnitz also extended the circle idea to that of overlapping them in early attempts at logical manipulation that foreshadowed the work that John Venn would do later. Leibnitz also saw in numbers a powerful argument for the existence of God. God, he saw as the numeral 1, and 0 was the nothingness from which He created the world. There are those, including Voltaire whose Candide satirized the notion, who question that it is the best of all possible worlds, but none can question that in the seventeenth century Leibnitz foresaw the coming power of the binary system. He also built arithmetical computers that could add and subtract, multiply and divide.

A few years earlier than Leibnitz, Blaise Pascal was also interested in computing machines. As a teen-ager working in his father’s tax office, Pascal wearied of adding the tedious figures so he built himself a gear-driven computer that would add eight columns of numbers. A tall figure in the scientific world, Pascal had fathered projective geometry at age sixteen and later established hydrodynamics as a science. To assist a gambler friend, he also developed the theory of probability which led to statistical science.

Another mathematical innovation of the century was that of placing logarithms on a stick by the Scot, John Napier. What he had done, of course, was to make an analog, or scale model of the arithmetical numbers. “Napier’s bones” quickly became what we now call slide rules, forerunners of a whole class of analog computers that solve problems by being actual models of size or quantity. Newton joined Leibnitz in contributing another valuable tool that would be used in the computer, that of the calculus.
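The principle behind a logarithmic scale can be shown in a few lines: lay off each number at a distance equal to its logarithm, add the two lengths, and read the product where the sum falls. The Python below is a minimal sketch of that idea, not a model of any particular instrument; the function name `slide_rule_multiply` is hypothetical.

```python
import math


def slide_rule_multiply(a, b):
    """Multiply two positive numbers by adding lengths on a logarithmic scale."""
    length_a = math.log10(a)  # distance of a along the fixed scale
    length_b = math.log10(b)  # distance of b along the sliding scale
    combined = length_a + length_b
    return 10 ** combined     # number found at the combined distance


print(slide_rule_multiply(2, 3))
print(slide_rule_multiply(217, 29))
```

The answers carry the small rounding error of floating-point arithmetic, much as a real slide rule is limited by how finely its scale can be read. That is the mark of an analog device: its accuracy depends on the precision of the model.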

Even as Plato had viewed with suspicion the infringement of mechanical devices on man’s domain of higher thought, other men have continued to eye the growth of “mechanisms” with mounting alarm. The scientist and inventor battled not merely technical difficulties, but the scornful satire and righteous condemnation of some of their fellow men. Jonathan Swift, the Irish satirist who took a swipe at many things that did not set well with his views, lambasted the computing machine as a substitute for the brain. In Chapter V, Book Three, of Gulliver’s Travels, the good dean runs up against a scheming scientist in Laputa:

The first Professor I saw was in a very large Room, with Forty Pupils about him. After Salutation, observing me to look earnestly upon a Frame, which took up the greatest part of both the Length and Breadth of the Room; he said, perhaps I might wonder to see him employed in a Project for improving speculative knowledge by practical and mechanical Operations. But the World would soon be sensible of its Usefulness; and he flattered himself, that a more noble exalted Thought never sprang in any other Man’s Head. Every one knew how laborious the usual Method is of attaining to Arts and Sciences; whereas by his Contrivance, the most ignorant Person at a reasonable Charge, and with a little bodily Labour, may write Books in Philosophy, Poetry, Politicks, Law, Mathematicks, and Theology, without the least Assistance from Genius or Study. He then led me to the Frame, about the Sides whereof all his Pupils stood in Ranks. It was a Twenty Foot Square, placed in the Middle of the Room. The Superfices was composed of several Bits of Wood, about the Bigness of a Dye, but some larger than others. They were all linked together by slender Wires. These Bits of Wood were covered on every Square with Papers pasted on them; and on these Papers were written all the Words of their Language in their several Moods, Tenses, and Declensions, but without any Order. The Professor then desired me to observe, for he was going to set his Engine to work. The Pupils at his Command took each the hold of an Iron Handle, whereof there were Forty fixed round the Edges of the Frame; and giving them a sudden Turn, the whole Disposition of the Words was entirely changed. He then commanded Six and Thirty of the Lads to read the several Lines softly as they appeared upon the Frame; and where they found three or four Words together that might make Part of a Sentence, they dictated to the four remaining Boys who were Scribes. This work was repeated three or four Times, and at every Turn the Engine was so contrived, that the Words shifted into new Places, as the square Bits of Wood moved upside down.

Six hours a-day the young Students were employed in this Labour; and the Professor showed me several Volumes in large Folio already collected, of broken Sentences, which he intended to piece together, and out of those rich Materials to give the World a compleat Body of Art and Sciences; which however might be still improved, and much expedited, if the Publick would raise a Fund for making and employing five Hundred such Frames in Lagado....

Fortunately for Swift, who would have been horrified by it, he never heard Russell Maloney’s classic story, “Inflexible Logic,” about six monkeys pounding away at typewriters and re-creating the world’s great literature. Gulliver’s Travels is not listed in their accomplishments.

The French Revolution prompted no less an orator than Edmund Burke to deliver in 1790 an address titled “Reflections on the French Revolution,” in which he extols the virtues of the dying feudal order in Europe. It galled Burke that “The Age of Chivalry is gone. That of sophists, economists, and calculators has succeeded, and the glory of Europe is extinguished forever.”

Seventy years later another eminent Englishman named Darwin published a book called On the Origin of Species that in the eyes of many readers did little to glorify man himself. Samuel Butler, better known for his novel, The Way of All Flesh, wrote too of the mechanical being, and was one of the first to point out just what sort of future Darwin was suggesting. In the satirical Erewhon, he described the machines of this mysterious land in some of the most prophetic writing that has been done on the subject. It was almost a hundred years ago that Butler wrote the first version, called “Darwin Among the Machines,” but the words ring like those of a 1962 worrier over the electronic brain. Butler’s character warns:

There is no security against the ultimate development of mechanical consciousness in the fact of machines possessing little consciousness now. Reflect upon the extraordinary advance which machines have made during the last few hundred years, and note how slowly the animal and vegetable kingdoms are advancing. The more highly organized machines are creatures not so much of yesterday, as of the last five minutes, so to speak, in comparison with past time.

Do not let me be misunderstood as living in fear of any actually existing machine; there is probably no known machine which is more than a prototype of future mechanical life. The present machines are to the future as the early Saurians to man ... what I fear is the extraordinary rapidity with which they are becoming something very different to what they are at present.

Butler envisioned the day when the present rude cries with which machines call out to one another will have been developed to a speech as intricate as our own. After all, “... take man’s vaunted power of calculation. Have we not engines which can do all manner of sums more quickly and correctly than we can? What prizeman in Hypothetics at any of our Colleges of Unreason can compare with some of these machines in their own line?”

Noting another difference in man and his creation, Butler says,

... Our sum-engines never drop a figure, nor our looms a stitch; the machine is brisk and active, when the man is weary, it is clear-headed and collected, when the man is stupid and dull, it needs no slumber.... May not man himself become a sort of parasite upon the machines? An affectionate machine-tickling aphid?

It can be answered that even though machines should hear never so well and speak never so wisely, they will still always do the one or the other for our advantage, not their own; that man will be the ruling spirit and the machine the servant.... This is all very well. But the servant glides by imperceptible approaches into the master, and we have come to such a pass that, even now, man must suffer terribly on ceasing to benefit the machines. If all machines were to be annihilated ... man should be left as it were naked upon a desert island, we should become extinct in six weeks.

Is it not plain that the machines are gaining ground upon us, when we reflect on the increasing number of those who are bound down to them as slaves, and of those who devote their whole souls to the advancement of the mechanical kingdom?

Butler considers the argument that machines at least cannot copulate, since they have no reproductive system. “If this be taken to mean that they cannot marry, and that we are never likely to see a fertile union between two vapor-engines with the young ones playing about the door of the shed, however greatly we might desire to do so, I will readily grant it. [But] surely if a machine is able to reproduce another machine systematically, we may say that it has a reproductive system.”

Butler repeats his main theme. “... his [man’s] organization never advanced with anything like the rapidity with which that of the machine is advancing. This is the most alarming feature of the case, and I must be pardoned for insisting on it so frequently.”

Then there is a startlingly clear vision of the machines “regarded as a part of man’s own physical nature, being really nothing but extra-corporeal limbs. Man ... as a machinate mammal.” This was feared as leading to eventual weakness of man until we finally found “man himself being nothing but soul and mechanism, an intelligent but passionless principle of mechanical action.” And so the Erewhonians in self-defense destroyed all inventions discovered in the preceding 271 years!

During the nineteenth century, weaving was one of the most competitive industries in Europe, and new inventions were often closely guarded secrets. Just such an idea was that of Frenchman Joseph M. Jacquard, an idea that automated the loom and would later become the basis for the first modern computers. A big problem in weaving was how to control a multiplicity of flying needles to create the desired pattern in the material. There were ways of doing this, of course, but all of them were unwieldy and costly. Then Jacquard hit on a clever scheme. If he took a card and punched holes in it where he wanted the needles to be actuated, it was simple to make the needles do his bidding. To change the pattern took only another card, and cards were cheap. The loom was patented in 1801, and there were soon thousands of Jacquard looms in operation, doing beautiful and accurate designs at a reasonable price.
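Jacquard’s scheme, in which the holes in a card decide which needles act, is simple enough to imitate in Python. The sketch below is a toy model only: the card notation ("O" for a hole, "." for solid card) and the names `actuated_needles` and `weave` are assumptions made for illustration.

```python
def actuated_needles(card_row):
    """Return the positions of the needles that a row of holes sets in motion."""
    return [position for position, mark in enumerate(card_row) if mark == "O"]


def weave(cards):
    """Render each card row as a row of cloth, '#' where a needle was actuated."""
    return ["".join("#" if mark == "O" else " " for mark in row) for row in cards]


# Changing the pattern means changing only this list of cards.
diamond = [
    "..O..",
    ".O.O.",
    "O...O",
    ".O.O.",
    "..O..",
]

for line in weave(diamond):
    print(line)
```

The loom itself (here, the two functions) never changes; the pattern lives entirely in the cards, which is the idea later carried over to computing machines.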

To show off the scope of his wonderful punched cards, Jacquard had one of his looms weave a portrait of him in silk. The job took 20,000 cards, but it was a beautiful and effective testimonial. And fatefully a copy of the silk portrait would later find its way into the hands of a man who would do much more with the oddly punched cards.

At about this same time, a Hungarian named Wolfgang von Kempelen decided that machines could play games as well as work in factories. So von Kempelen built himself a chess-playing machine called the Maelzel Chess Automaton with which he toured Europe. The inventor and his machine played a great game, but they didn’t play fair. Hidden in the innards of the Maelzel Automaton was a second human player, but this disillusioning truth was not known for some time. Thus von Kempelen doubtless spurred other inventors to the task, and in a short while machines would actually begin to play the royal game. For instance, a Spaniard named L. Torres y Quevedo built a chess-playing machine in 1914. This device played a fair “end game” using several pieces, and its inventor predicted future work in this direction using more advanced machines.

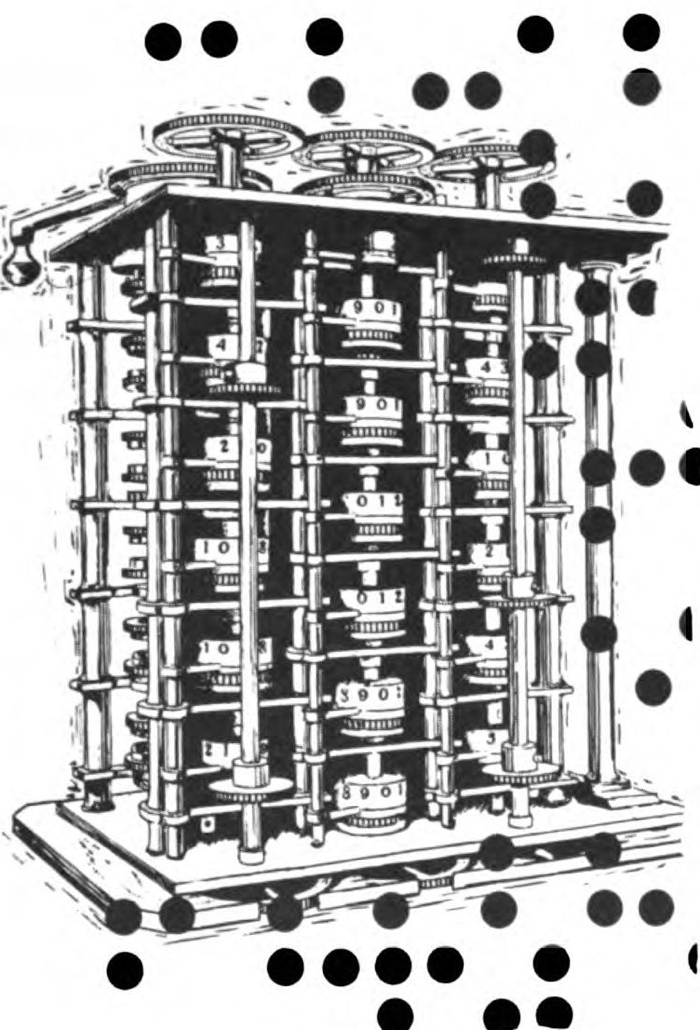

Charles Babbage was an English scientist with a burning desire for accuracy. When some mathematical tables prepared for the Astronomical Society proved to be full of errors, he angrily determined to build a machine that would do the job with no mistakes. Of course calculating machines had been built before; but the machine Babbage had in mind was different. In fact, he called it a “difference engine” because it was based on the difference tables of the squares of numbers. The first of the “giant computers,” it was to have hundreds of gears and shafts, ratchets and counters. Any arithmetic problem could be set into it, and when the proper cranks were turned, out would come an answer—the right answer because the machine could not make a mistake. After doing some preliminary work on his difference engine, Babbage interested the government in his project; though fairly well-to-do, he realized it would cost more money than he could afford to sink into it. Babbage was a respected scientist, Lucasian Professor of Mathematics at Cambridge, and because of his reputation and the promise of the machine, the Chancellor of the Exchequer promised to underwrite the project.
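The “difference tables of the squares of numbers” rest on a simple fact: successive squares differ by 1, 3, 5, 7, and so on, and those differences themselves always differ by 2. A table of squares can therefore be built by addition alone, which is what gears and counters do well. The Python sketch below illustrates the method; it does not describe Babbage’s actual mechanism, and the name `squares_by_differences` is hypothetical.

```python
def squares_by_differences(count):
    """Tabulate 0^2, 1^2, 2^2, ... using only addition."""
    value = 0        # the current square, starting at 0 squared
    first_diff = 1   # 1 squared minus 0 squared
    second_diff = 2  # the constant difference between successive differences
    table = []
    for _ in range(count):
        table.append(value)
        value += first_diff        # step to the next square
        first_diff += second_diff  # step to the next difference
    return table


print(squares_by_differences(10))
```

No multiplication appears anywhere in the loop; each turn of the crank, so to speak, is two additions.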

For four years Babbage and his mechanics toiled. Instead of completing his original idea, the scientist had succeeded only in designing a far more complicated machine, one which would when finished weigh about two tons. Because the parts he needed were advanced beyond the state of the art of metalworking, Babbage was forced to design and build them himself. In the process he decided that industry was being run all wrong, and took time out to write a book. It was an excellent book, a sort of forerunner to the modern science of operations research, and Babbage’s machine shop was doing wonders for the metalworking art.

Undaunted by the lack of progress toward a concrete result, Babbage was thinking bigger and bigger. He was going to scrap the difference engine, or rather put it in a museum, and build a far better computer—an “analytical engine.” If Jacquard’s punched cards could control the needles on a loom, they could also operate the gears and other parts of a calculating machine. This new engine would be one that could not only add, subtract, multiply, and divide; it would be designed to control itself. And as the answers started to come out, they would be fed back to do more complex problems with no further work on the operator’s part. “Having the machine eat its own tail!” Babbage called this sophisticated bit of programming. This mechanical cannibalism was the root of the “feedback” principle widely used in machines today. Echoing Watt’s steam governor, it prophesied the coming control of machines by the machines themselves. Besides this innovation, the machine would have a “store,” or memory, of one thousand fifty-digit numbers that it could draw on, and it would actually exercise judgment in selection of the proper numbers. And as if that weren’t enough, it would print out the correct answers automatically on specially engraved copper plates!

Space Technology Laboratories

“As soon as an Analytical Engine exists, it will necessarily guide the future course of science. Whenever any result is sought by its aid, the question will then arise—by what course of calculation can these results be arrived at by the machine in the shortest time?” Charles Babbage—Passages from the Life of a Philosopher, 1864.

It was a wonderful dream; a dream that might have become an actuality in Babbage’s own time if machine technology had been as advanced as his ideas. But for Babbage it remained only a dream, a dream that never did work successfully. The government spent £17,000, a huge sum for that day and time, and bowed out. Babbage fumed and then put his own money into the machine. His mechanics left him and became leaders in the machine-tool field, having trained in Babbage’s workshops. In despair, he gave up on the analytical engine and designed another difference engine. An early model of this one would work to five accurate places, but Babbage had his eyes on a much better goal—twenty-place accuracy. A lesser man would have aimed more realistically and perhaps delivered workable computers to the mathematicians and businessmen of the day. There is a legend that his son did finish one of the simpler machines and that it was used in actuarial accounting for many years. But Babbage himself died in 1871 unaware of how much he had done for the computer technology that would begin to flower a few short decades later.

Singlehandedly he had given the computer art the idea of programming and of sequential control, a memory in addition to the arithmetic unit he called a “mill,” and even an automatic readout such as is now standard on modern computers. Truly, the modern computer was “Babbage’s dream come true.”

Concurrently with the great strides being made with mechanical computers that could handle mathematics, much work was also being done on the formalizing of logic. As hinted vaguely in the syllogisms of the early philosophers, thinking did seem amenable to being diagrammed, much like grammar. Augustus De Morgan devised numerical logic systems, and George Boole set up the logic system that has come to be known as Boolean algebra, in which reasoning is reduced to positive or negative terms that can be manipulated algebraically to give valid answers.

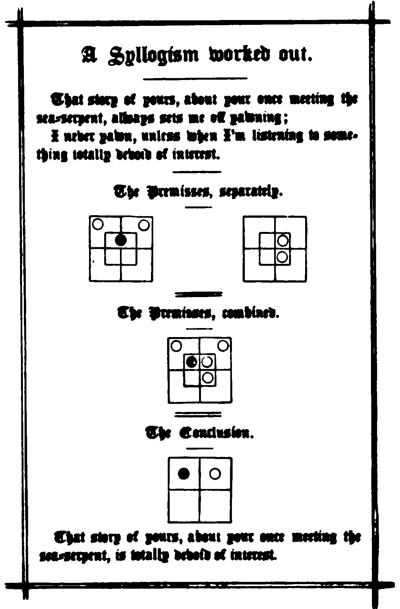

John Venn put the idea of logic into pictures, and simple pictures at that. His symbology looks for all the world like the three interlocking rings of a well-known ale. These rings stand for the subject, midterm, and predicate of the older Aristotelian syllogism. By shading the various circles according to the major and minor premises, the user of Venn circles can see the logical result by inspection. Implicit in the scheme is the possibility of a mechanical or electrical analogy to this visual method, and it was not long until mathematicians began at least on the mechanical kind. Among these early logic mechanizers, surprisingly, was Lewis Carroll, who of course was mathematician Charles L. Dodgson before he became a writer.

Carroll, who was a far busier man than most of us ever guess, marketed a “Game of Logic,” with a board and colored cardboard counters that handled problems like the following:

By arranging the counters on Carroll’s game board so that: All M are X, and No Y is not-M, we learn that No Y is not-X! This tells the initiate logician that no nightingale dislikes sugar; a handy piece of information for bird-fancier and sugar-broker alike.
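What the counters accomplish on the board can be imitated by brute force: try every combination of the three attributes and confirm that nothing satisfies both premises while breaking the conclusion. The sketch below is an illustration under a stated assumption, namely that each statement is read as a rule every individual must obey, with no claim that any M, X, or Y actually exists. The function name is made up for the example.

```python
from itertools import product


def carroll_syllogism_is_valid():
    """Check: All M are X; No Y is not-M; therefore No Y is not-X."""
    for m, x, y in product([False, True], repeat=3):
        all_m_are_x = (not m) or x           # an M must also be an X
        no_y_is_not_m = not (y and not m)    # a Y may not lack M
        no_y_is_not_x = not (y and not x)    # a Y may not lack X
        if all_m_are_x and no_y_is_not_m and not no_y_is_not_x:
            return False  # found a counterexample individual
    return True


print(carroll_syllogism_is_valid())  # True
```

Only eight cases need checking, which is why a small board, or a small machine, is equal to the task.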

Lewis Carroll’s “Symbolic Logic.”

Charles, the third Earl Stanhope, was only slightly less controversial than his prime minister, William Pitt. Scientifically he was far out too, writing books on electrical theory, inventing steamboats, microscopes, and printing presses among an odd variety of projects; he also became interested in mechanical logic and designed the “Stanhope Demonstrator,” a contrivance like a checkerboard with sliding panels. By properly manipulating the demonstrator he could solve such problems as:

What are the minimum and maximum number of bright boys? A simple sliding of scales on the Stanhope Demonstrator shows that two must be bright boys and as many as four may be. This clever device could also work out probability problems such as how many heads and tails will come up in so many tosses of a coin.

In 1869 William S. Jevons, an English economist and expert logician, built a logic machine. His was not the first, of course, but it had a unique distinction in that it solved problems faster than the human brain could! Using Boolean algebra principles, he built a “logical abacus” and then even a “logical piano.” By simply pressing the keys of this machine, the user could make the answer appear on its face. It is of interest that Jevons thought his machine of no practical use, since complex logical questions seldom arose in everyday life! Life, it seems, was simpler in 1869 than it is today, and we should be grateful that Jevons pursued his work through sheer scientific interest.

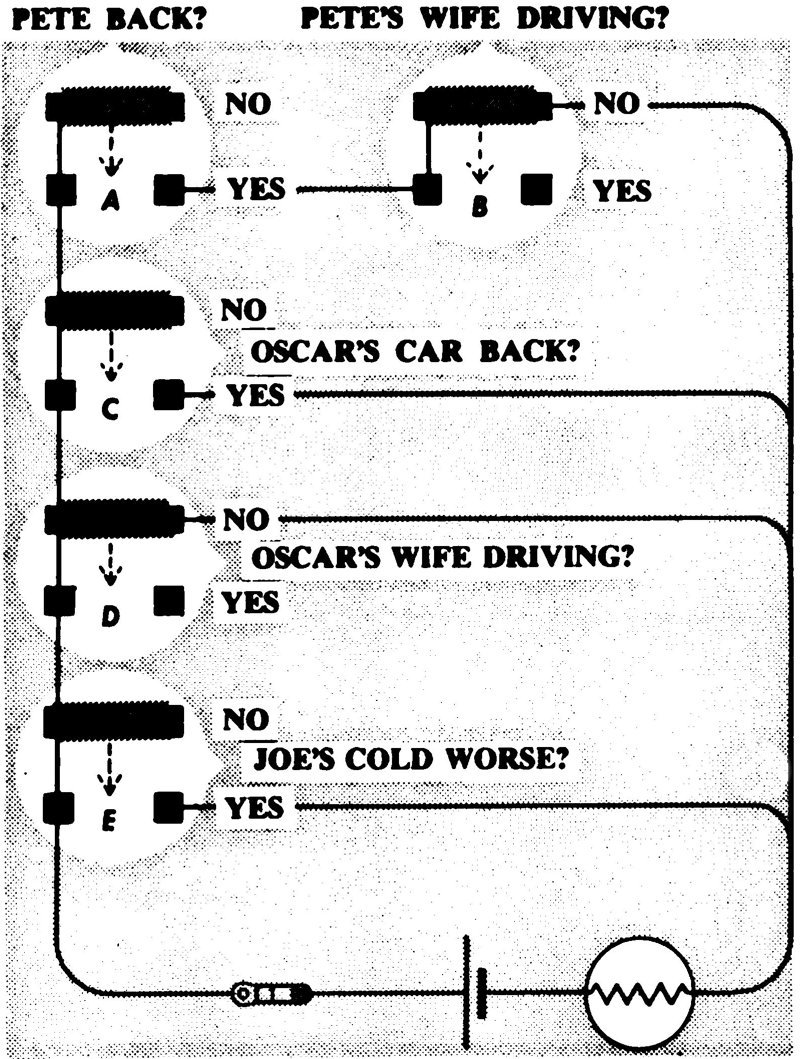

More sophisticated than the Jevons piano, the logic machine invented in America by Allan Marquand could handle four terms and do problems like the following:

The machine is smart enough to tell us that when Dora is at home the other three girls are all at home or out. The same thing is true when Dora is out.

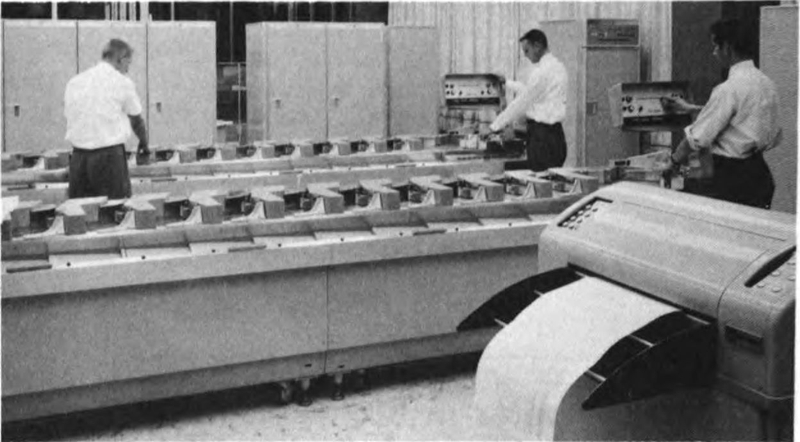

Moving from the sophistication of such logic devices, we find a tremendous advance in mechanical computers spurred by such a mundane chore as the census. The 1880 United States census required seven years for compiling; and that with only 50 million heads to reckon. It was plain to see that shortly a ten-year census would be impossible of completion unless something were done to cut the birth rate or speed the counting. Dr. Herman Hollerith was the man who did something about it, and as a result the 1890 census, with 62 million people counted, took only one-third the time of the previous tally.

Hollerith, a statistician living in Buffalo, New York, may or may not have heard the old saw about statistics being able to support anything—including the statisticians, but there was a challenge in the rapid growth of population that appealed to the inventor in him and he set to work. He came up with a card punched with coded holes, a card much like that used by Jacquard on his looms, and by Babbage on the dream computer that became a nightmare. But Hollerith did not meet the fate of his predecessors. Not stoned, or doomed to die a failure, Hollerith built his card machines and contracted with the government to do the census work. “It was a good paying business,” he said. It was indeed, and his early census cards would some day be known generically as “IBM cards.”

While Jacquard and Babbage of necessity used mechanical devices with their punched cards, Hollerith added the magic of electricity to his card machine, building in essence the first electrical computing machine. The punched cards were floated across a pool of mercury, and telescoping pins in the reading head dropped through the holes. As they contacted the mercury, an electrical circuit was made and another American counted. Hollerith did not stop with census work. Sagely he felt there must be commercial applications for his machines and sold two of the leading railroads on a punched-card accounting system. His firm merged with others to become the Computing-Tabulating-Recording Company, and finally International Business Machines. The term “Hollerith Coding” is still familiar today.

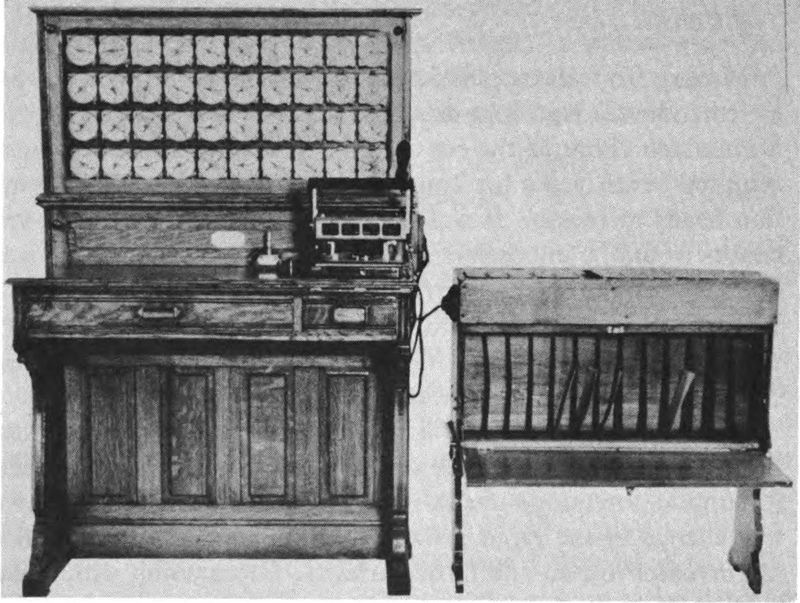

International Business Machines Corp.

Hollerith tabulating machine of 1890, forerunner of modern computers.

Edison was illuminating the world and the same electrical power was brightening the future of computing machines. As early as 1915 the Ford Instrument Company was producing in quantity a device known as “Range Keeper Mark I,” thought to be the first electrical-analog computer. In 1920, General Electric built a “short-circuit calculating board” that was an analog or model of the real circuits being tested. Westinghouse came up with an “alternating-current network analyzer” in 1933, and this analog computer was found to be a powerful tool for mathematics.

International Business Machines Corp.

A vertical punched-card sorter used in 1908.

While scientists were putting the machines to work, writers continued to prophesy doom when the mechanical man took over. Mary W. Shelley’s Frankenstein created a monster from a human body; a monster that in time would take his master’s name and father a long horrid line of other fictional monsters. Ambrose G. Bierce wrote of a diabolical chess-playing machine that was human enough to throttle the man who beat him at a game. But it remained for the Czech playwright Karel Čapek to give the world the name that has stuck to the mechanical man. In Čapek’s 1921 play, R.U.R., for Rossum’s Universal Robots, we are introduced to humanlike workers grown in vats of synthetic protoplasm. Robota is a Czech word meaning compulsory service, and apparently these mechanical slaves did not take to servitude, turning on their masters and killing them. Robot is generally accepted now to mean a mobile thinking machine capable of action. Before the advent of the high-speed electronic computer it had little likelihood of stepping out of the pages of a novel or movie script.

As early as 1885, Allan Marquand had proposed an electrical logic machine as an improvement over his simple mechanically operated model, but it was 1936 before such a device was actually built. In that year Benjamin Burack, a member of Chicago’s Roosevelt College psychology department, built and demonstrated his “Electrical Logic Machine.” Able to test all syllogisms, the Burack machine was unique in another respect. It was the first of the portable electrical computers.

The compatibility of symbolic logic and electrical network theory was becoming evident at about this time. The idea that yes-no corresponded to on-off was beautifully simple, and in 1938 there appeared in one of the learned journals what may fairly be called a historic paper. Appearing in Transactions of the American Institute of Electrical Engineers, “A Symbolic Analysis of Relay and Switching Circuits,” was written by Claude Shannon and was based on his thesis for the M.S. degree at the Massachusetts Institute of Technology a year earlier. One of its important implications was that the programming of a computer was more a logical than an arithmetical operation. Shannon had laid the groundwork for logical computer design; his work made it possible to teach the machine not only to add but also to think. Another monumental piece of work by Shannon was that on information theory, which revolutionized the science of communications. The author is now on the staff of the electronics research laboratory at M.I.T.
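The yes-no, on-off correspondence can be shown in a few lines. In the sketch below, two switches in series stand for "and," two in parallel stand for "or," and a normally closed contact stands for "not"; wired together they add two binary digits. This is a present-day illustration of the principle, not a circuit taken from Shannon's paper, and all the function names are invented for the example.

```python
from itertools import product


def series(a, b):
    """Current flows only if both switches are closed (logical AND)."""
    return a and b


def parallel(a, b):
    """Current flows if either switch is closed (logical OR)."""
    return a or b


def inverted(a):
    """A normally closed contact: conducts when the relay is off (NOT)."""
    return not a


def half_adder(a, b):
    """Add two one-bit inputs; return (sum bit, carry bit)."""
    carry = series(a, b)
    total = parallel(series(a, inverted(b)), series(inverted(a), b))
    return total, carry


for a, b in product([False, True], repeat=2):
    total, carry = half_adder(a, b)
    print(int(a), "+", int(b), "-> carry", int(carry), "sum", int(total))
```

The arithmetic here is built entirely from logical connections, which is the sense in which computer design is more a logical than an arithmetical matter.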

Two enterprising Harvard undergraduates put Shannon’s ideas to work on their problems in the symbolic logic class they were taking. Called a Kalin-Burkhart machine for its builders, this electrical logic machine did indeed work, solving the students’ homework assignments and saving them much tedious paperwork. Interestingly, when certain logical questions were posed for the machine, its circuits went into oscillation, making “a hell of a racket” in its frustration. The builders called this an example of “Russell’s paradox.” A typical logical paradox is that of the barber who shaved all men who didn’t shave themselves—who shaves the barber? Or of the condemned man permitted to make a last statement. If the statement is true, he will be beheaded; if false, he will hang. The man says, “I shall be hanged,” and thus confounds his executioners as well as logic, since if he is hanged, the statement is indeed true, and he should have been beheaded. If he is beheaded, the statement is false, and he should have been hanged instead.
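Why a paradox should make a circuit chatter can be seen in a toy model. Suppose a single on-off element is wired so that its next state is computed from its present one. A consistent statement settles at once; the barber's rule, "he shaves himself if and only if he does not," flips the element on every step. The sketch below is a simplified illustration of that behavior, not a description of the Kalin-Burkhart wiring, and its names are hypothetical.

```python
def run_feedback(update, start, max_steps=8):
    """Feed a state back through `update` until it stops changing.

    Returns (history of states, whether the circuit settled).
    """
    history = [start]
    state = start
    for _ in range(max_steps):
        next_state = update(state)
        if next_state == state:
            return history, True   # stable: the question has an answer
        history.append(next_state)
        state = next_state
    return history, False          # still flipping: oscillation


# The barber: his state must be the opposite of his own state.
history, settled = run_feedback(lambda shaves_himself: not shaves_himself,
                                start=False, max_steps=4)
print(history, settled)  # [False, True, False, True, False] False
```

In a relay machine each of those flips is an audible click, which goes some way toward explaining the racket.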

World War II, with its pressingly complex technological problems, spurred computer work mightily. Men like Vannevar Bush, whose work had been done at M.I.T., produced analog computers called “differential analyzers” which were useful in solving mathematics involved in design of aircraft and in ballistics problems.

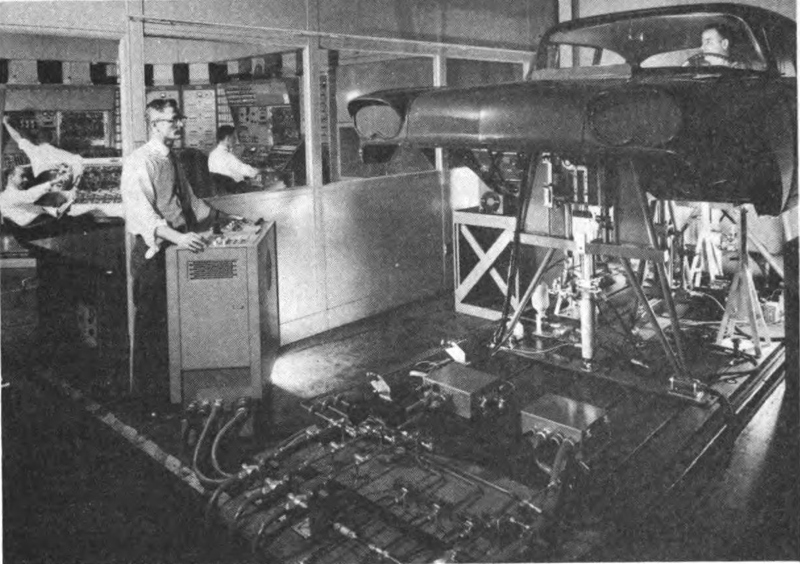

A computer built by General Electric for the gunsights on the World War II B-29 bomber is typical of applications of analog devices for computing and predicting, and is also an example of early airborne use of computing devices. Most computers, however, were sizable affairs. One early General Electric analog machine, described as a hundred feet long, indicates the trend toward the “giant brain” concept.

Even with the sophistication attained, these computers were hardly more than extensions of mechanical forerunners. In other words, gears and cams properly proportioned and actuated gave the proper answers whether they were turned by a manual crank or an electrical motor. The digital computer, which had somehow been lost in the shuffle of interest in computers, was now appearing on the scientific horizon, however, and in this machine would flower all the gains in computers from the abacus to electrical logic machines.

Many men worked on the digital concept. Aiken, who built the electromechanical Mark I at Harvard, and Williams in England are representative. But two scientists at the University of Pennsylvania get the credit for the world’s first electronic digital computer, ENIAC, a 30-ton, 150-kilowatt machine using vacuum tubes and semiconductor diodes and handling discrete numbers instead of continuous values as in the analog machine. The modern computer dates from ENIAC, Electronic Numerical Integrator And Computer.

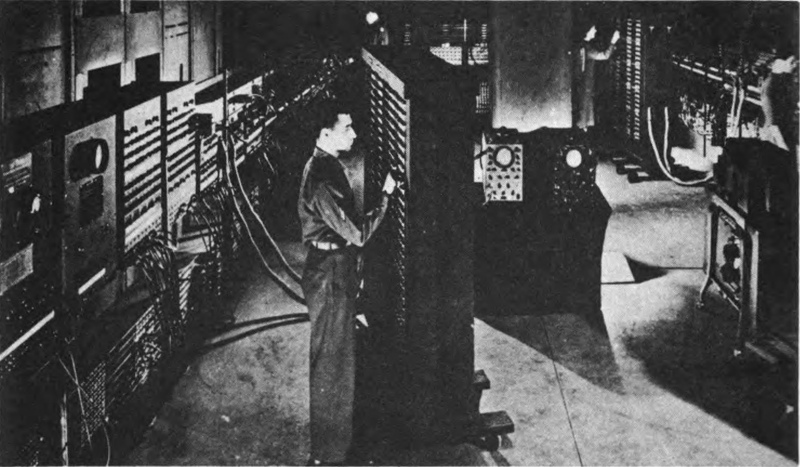

Remington Rand UNIVAC

ENIAC in operation. This was the first electronic digital computer.

Shannon’s work and the thinking of others in the field indicated the power of the digital, yes-no, approach. A single switch can only be on or off, but many such switches properly interconnected can do amazing things. At first these switches were electromechanical; in the Eckert-Mauchly ENIAC, completed for the government in 1946, vacuum tubes in the Eccles-Jordan “flip-flop” circuit married electronics and the computer. The progeny have been many, and their generations faster than those of man. ENIAC has been followed by BINAC and MANIAC, and even JOHNNIAC. UNIVAC and RECOMP and STRETCH and LARC and a whole host of other machines have been produced. At the start of 1962 there were some 6,000 electronic digital computers in service; by year’s end there will be 8,000. The golden age of the computer may be here, but as we have seen, it did not come overnight. The revolution has been slow, gathering early momentum with the golden wheels of Homer’s mechanical information-seeking vehicles that brought the word from the gods. Where it goes from here depends on us, and maybe on the computer itself.
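What makes a flip-flop valuable is that two simple on-off elements, each holding the other in check, can remember a single yes or no after the signal that set it has gone. The sketch below models this with two cross-coupled NOR gates, a common textbook stand-in; it is an assumption made for illustration and not the tube-level Eccles-Jordan circuit, and the function names are invented.

```python
def nor(a, b):
    """Output is on only when both inputs are off."""
    return not (a or b)


def sr_latch(set_in, reset_in, q, q_bar):
    """Settle a cross-coupled NOR latch; return the new (q, q_bar)."""
    for _ in range(4):  # a few passes are enough for the pair to settle
        new_q = nor(reset_in, q_bar)
        new_q_bar = nor(set_in, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q, q_bar


state = (False, True)                    # latch starts out holding "no"
state = sr_latch(True, False, *state)    # pulse the set input
state = sr_latch(False, False, *state)   # inputs removed: the bit is kept
print(state)  # (True, False)
state = sr_latch(False, True, *state)    # pulse the reset input
print(state)  # (False, True)
```

Thousands of such one-bit memories, switching at electronic speed, account for the jump in speed over relay machines.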

—John Weiss

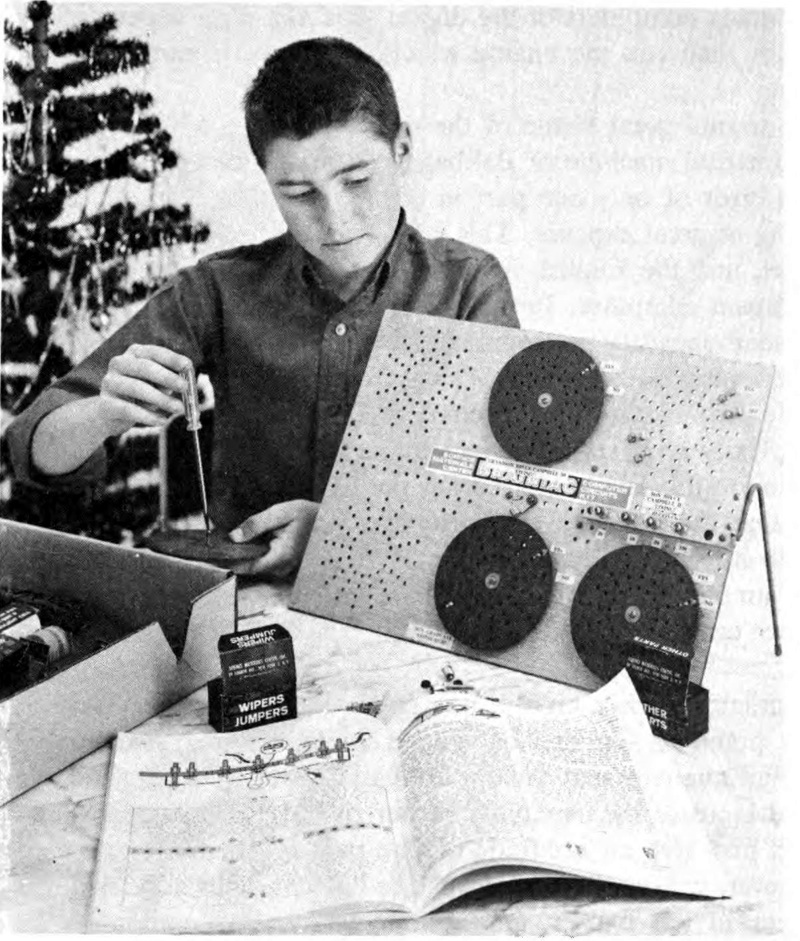

In the past decade or so, an amazing and confusing number of computing machines have developed. To those of us unfamiliar with the beast, many of them do not look at all like what we imagined computers to be; others are even more awesome than the wildest science-fiction writer could dream up. On the more complex, lights flash, tape reels spin dizzily, and printers clatter at mile-a-minute speeds. We are aware, or perhaps just take on faith, that the electronic marvel is doing its sums at so many thousand or million per second, cranking out mathematical proofs and processing data at a rate to make mere man seem like the dullest slowpoke. Just how computers do this is pretty much of a mystery unless we are of the breed that works with them. Actually, in spite of all the blurring speed and seeming magic, the basic steps of computer operation are quite simple and generally the same for all types of machines from the modestly priced electromechanical do-it-yourself model to STRETCH, MUSE, and other ten-million-dollar computers.